publications

2025

- VarDial

Large Language Models as a Normalizer for Transliteration and Dialectal TranslationMd Mahfuz Ibn Alam, and Antonios AnastasopoulosIn Proceedings of the 12th Workshop on NLP for Similar Languages, Varieties and Dialects Code here , Jan 2025

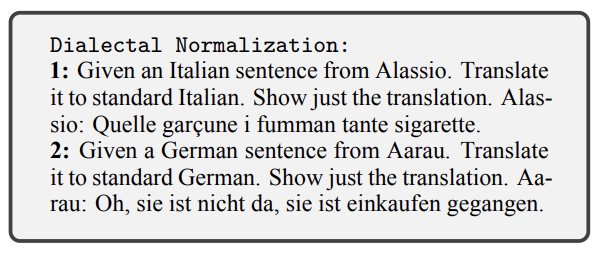

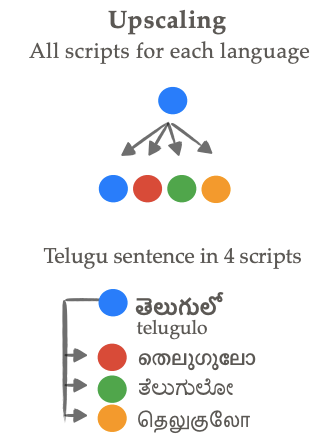

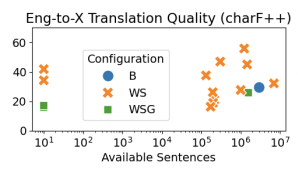

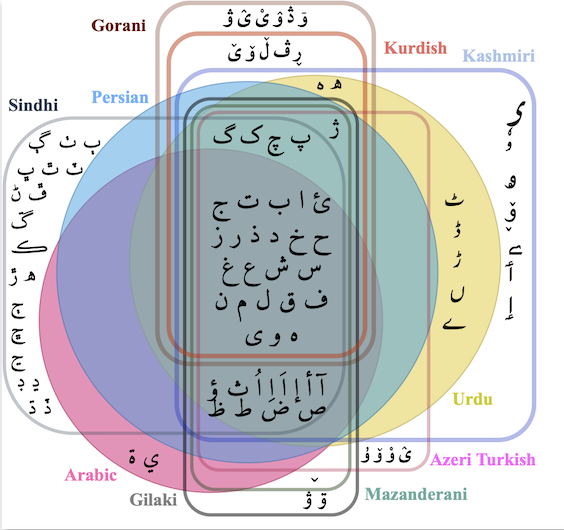

Large Language Models as a Normalizer for Transliteration and Dialectal TranslationMd Mahfuz Ibn Alam, and Antonios AnastasopoulosIn Proceedings of the 12th Workshop on NLP for Similar Languages, Varieties and Dialects Code here , Jan 2025NLP models trained on standardized language data often struggle with variations. We assess various Large Language Models (LLMs) for transliteration and dialectal normalization. Tuning open-source LLMs with as little as 10,000 parallel examples using LoRA can achieve results comparable to or better than closed-source LLMs. We perform dialectal normalization experiments for twelve South Asian languages and dialectal translation experiments for six language continua worldwide. The dialectal normalization task can also be a preliminary step for the downstream dialectal translation task. Among the six languages used in dialectal translation, our approach enables Italian and Swiss German to surpass the baseline model by 21.5 and 25.8 BLEU points, respectively.

@inproceedings{alam-anastasopoulos-2025-large, title = {Large Language Models as a Normalizer for Transliteration and Dialectal Translation}, author = {Alam, Md Mahfuz Ibn and Anastasopoulos, Antonios}, editor = {Scherrer, Yves and Jauhiainen, Tommi and Ljube{\v{s}}i{\'c}, Nikola and Nakov, Preslav and Tiedemann, Jorg and Zampieri, Marcos}, booktitle = {Proceedings of the 12th Workshop on NLP for Similar Languages, Varieties and Dialects}, month = jan, year = {2025}, address = {Abu Dhabi, UAE}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2025.vardial-1.5/}, pages = {39--67}, } - VarDialTesting the Boundaries of LLMs: Dialectal and Language-Variety TasksFahim Faisal, and Antonios AnastasopoulosIn Proceedings of the 12th Workshop on NLP for Similar Languages, Varieties and Dialects Code here , Jan 2025

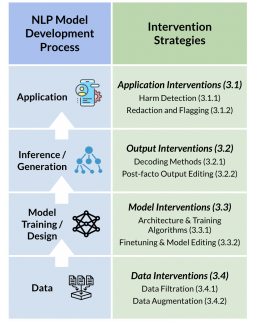

This study evaluates the performance of large language models (LLMs) on benchmark datasets designed for dialect-specific NLP tasks. Dialectal NLP is a low-resource field, yet it is crucial for evaluating the robustness of language models against linguistic diversity. This work is the first to systematically compare state-of-the-art instruction-tuned LLMs—both open-weight multilingual and closed-weight generative models—with encoder-based models that rely on supervised task-specific fine-tuning for dialectal tasks. We conduct extensive empirical analyses to provide insights into the current LLM landscape for dialect-focused tasks. Our findings indicate that certain tasks, such as dialect identification, are challenging for LLMs to replicate effectively due to the complexity of multi-class setups and the suitability of these tasks for supervised fine-tuning. Additionally, the structure of task labels—whether categorical or continuous scoring—significantly affects model performance. While LLMs excel in tasks like machine reading comprehension, their instruction-following ability declines in simpler tasks like POS tagging when task instructions are inherently complex. Overall, subtle variations in prompt design can greatly impact performance, underscoring the need for careful prompt engineering in dialectal evaluations.

@inproceedings{faisal-anastasopoulos-2025-testing, title = {Testing the Boundaries of {LLM}s: Dialectal and Language-Variety Tasks}, author = {Faisal, Fahim and Anastasopoulos, Antonios}, editor = {Scherrer, Yves and Jauhiainen, Tommi and Ljube{\v{s}}i{\'c}, Nikola and Nakov, Preslav and Tiedemann, Jorg and Zampieri, Marcos}, booktitle = {Proceedings of the 12th Workshop on NLP for Similar Languages, Varieties and Dialects}, month = jan, year = {2025}, address = {Abu Dhabi, UAE}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2025.vardial-1.6/}, pages = {68--92}, } - ALP

Towards Ancient Meroitic Decipherment: A Computational ApproachJoshua N. Otten, and Antonios AnastasopoulosIn Proceedings of the Second Workshop on Ancient Language Processing, May 2025

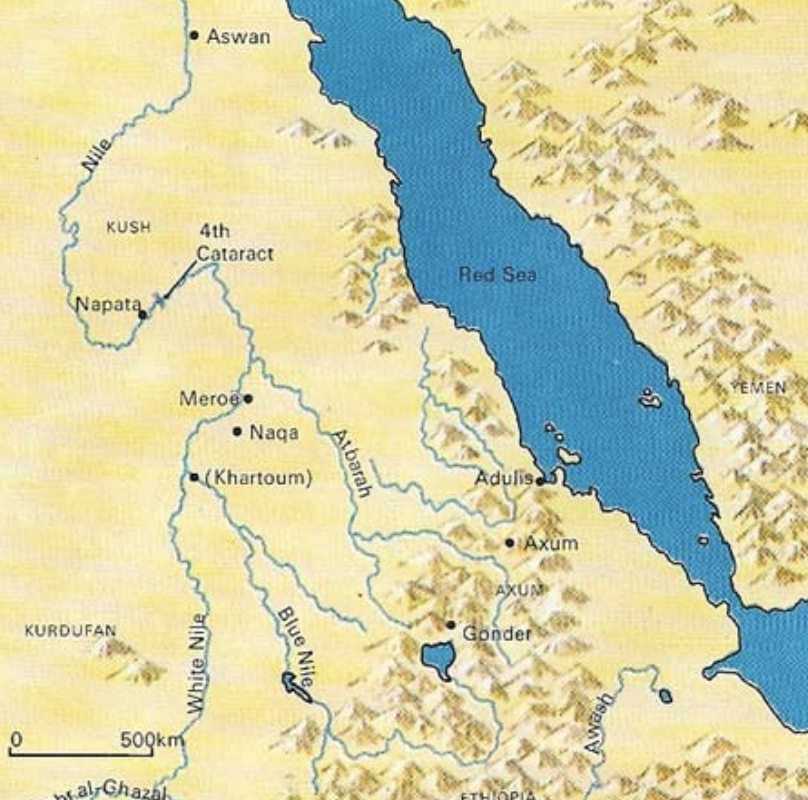

Towards Ancient Meroitic Decipherment: A Computational ApproachJoshua N. Otten, and Antonios AnastasopoulosIn Proceedings of the Second Workshop on Ancient Language Processing, May 2025The discovery of the Rosetta Stone was one of the keys that helped unlock the secrets of Ancient Egypt and its hieroglyphic language. But what about languages with no such ’Rosetta Stone?’ Meroitic is an ancient language from what is now present-day Sudan, but even though it is connected to Egyptian in many ways, much of its grammar and vocabulary remains undeciphered. In this work, we introduce the challenge of Meroitic decipherment as a computational task, and present the first Meroitic machine-readable corpus. We then train embeddings and perform intrinsic evaluations, as well as cross-lingual alignment experiments between Meroitic and Late-Egyptian. We conclude by outlining open problems and potential research directions.

@inproceedings{otten-anastasopoulos-2025-towards, title = {Towards {A}ncient {M}eroitic Decipherment: A Computational Approach}, author = {Otten, Joshua N. and Anastasopoulos, Antonios}, editor = {Anderson, Adam and Gordin, Shai and Li, Bin and Liu, Yudong and Passarotti, Marco C. and Sprugnoli, Rachele}, booktitle = {Proceedings of the Second Workshop on Ancient Language Processing}, month = may, year = {2025}, address = {The Albuquerque Convention Center, Laguna}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2025.alp-1.11/}, pages = {87--98}, isbn = {979-8-89176-235-0}, } - NAACL

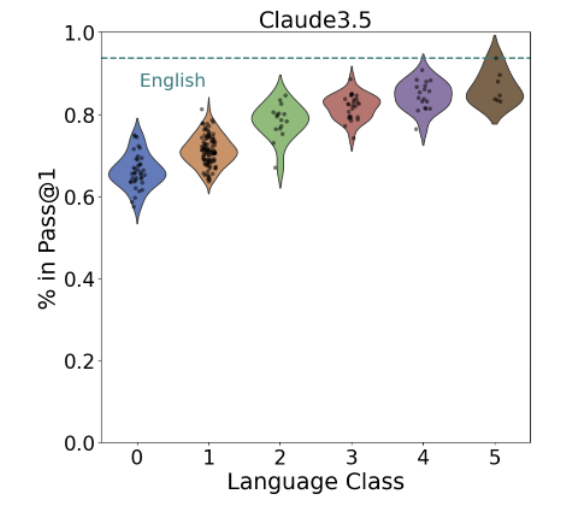

mHumanEval - A Multilingual Benchmark to Evaluate Large Language Models for Code GenerationMd Nishat Raihan, Antonios Anastasopoulos, and Marcos ZampieriIn Proceedings of the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers) Code here , Apr 2025

mHumanEval - A Multilingual Benchmark to Evaluate Large Language Models for Code GenerationMd Nishat Raihan, Antonios Anastasopoulos, and Marcos ZampieriIn Proceedings of the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers) Code here , Apr 2025@inproceedings{raihan-etal-2025-mhumaneval, title = {m{H}uman{E}val - A Multilingual Benchmark to Evaluate Large Language Models for Code Generation}, author = {Raihan, Md Nishat and Anastasopoulos, Antonios and Zampieri, Marcos}, editor = {Chiruzzo, Luis and Ritter, Alan and Wang, Lu}, booktitle = {Proceedings of the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers)}, month = apr, year = {2025}, address = {Albuquerque, New Mexico}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2025.naacl-long.570/}, pages = {11432--11461}, isbn = {979-8-89176-189-6}, } - NAACL

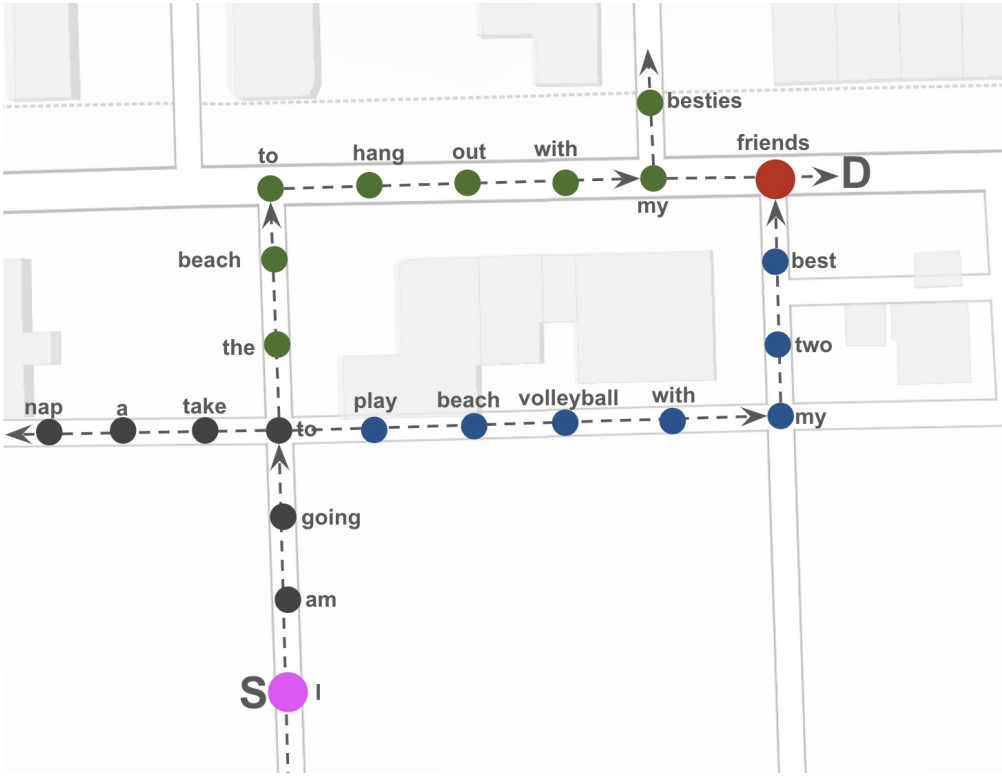

Follow the Beaten Path: The Role of Route Patterns on Vision-Language Navigation Agents Generalization AbilitiesKourosh T Baghaei, Dieter Pfoser, and Antonios AnastasopoulosIn Proceedings of the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers) Code here , Apr 2025

Follow the Beaten Path: The Role of Route Patterns on Vision-Language Navigation Agents Generalization AbilitiesKourosh T Baghaei, Dieter Pfoser, and Antonios AnastasopoulosIn Proceedings of the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers) Code here , Apr 2025@inproceedings{baghaei-etal-2025-follow, title = {Follow the Beaten Path: The Role of Route Patterns on Vision-Language Navigation Agents Generalization Abilities}, author = {Baghaei, Kourosh T and Pfoser, Dieter and Anastasopoulos, Antonios}, editor = {Chiruzzo, Luis and Ritter, Alan and Wang, Lu}, booktitle = {Proceedings of the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers)}, month = apr, year = {2025}, address = {Albuquerque, New Mexico}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2025.naacl-long.406/}, pages = {7986--8005}, isbn = {979-8-89176-189-6}, } - NAACL

Script-Agnosticism and its Impact on Language Identification for Dravidian LanguagesMilind Agarwal, Joshua Otten, and Antonios AnastasopoulosIn Proceedings of the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers) Code here , Apr 2025

Script-Agnosticism and its Impact on Language Identification for Dravidian LanguagesMilind Agarwal, Joshua Otten, and Antonios AnastasopoulosIn Proceedings of the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers) Code here , Apr 2025@inproceedings{agarwal-etal-2025-script, title = {Script-Agnosticism and its Impact on Language Identification for {D}ravidian Languages}, author = {Agarwal, Milind and Otten, Joshua and Anastasopoulos, Antonios}, editor = {Chiruzzo, Luis and Ritter, Alan and Wang, Lu}, booktitle = {Proceedings of the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers)}, month = apr, year = {2025}, address = {Albuquerque, New Mexico}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2025.naacl-long.377/}, pages = {7364--7384}, isbn = {979-8-89176-189-6}, } - WACV

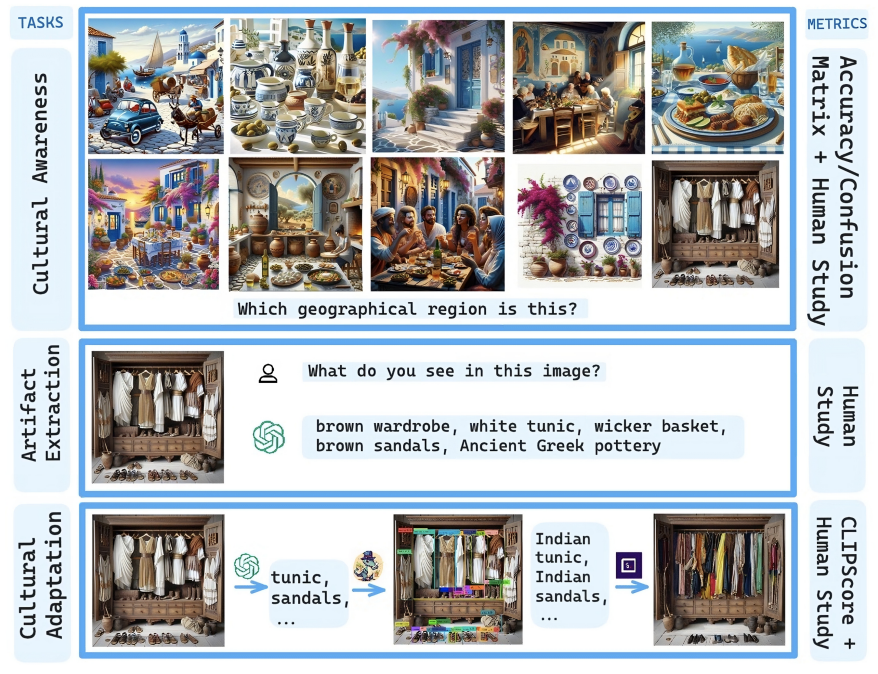

Crossroads of Continents: Automated Artifact Extraction for Cultural Adaptation with Large Multimodal ModelsAnjishnu Mukherjee, Ziwei Zhu, and Antonios AnastasopoulosIn 2025 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV) Code here , Feb 2025

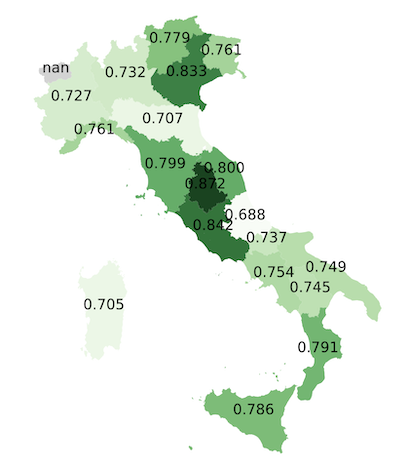

Crossroads of Continents: Automated Artifact Extraction for Cultural Adaptation with Large Multimodal ModelsAnjishnu Mukherjee, Ziwei Zhu, and Antonios AnastasopoulosIn 2025 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV) Code here , Feb 2025We present a comprehensive three-phase study to ex-amine (1) the cultural understanding of Large Multimodal Models (LMMs) by introducing Dalle Street, a large-scale dataset generated by DALL-E 3 and validated by hu-mans, containing 9, 935 images of 67 countries and 10 concept classes, (2) the underlying implicit and potentially stereotypical cultural associations with a cultural artifact extraction task, and (3) an approach to adapt cultural representation in an image based on extracted associations using a modular pipeline, Cultureadapt. We find disparities in cultural understanding at geographic sub-region levels with both open-source (LLaVA) and closed-source (GPT-4V) models on Dalle Street and other existing benchmarks, which we try to understand using over 18, 000 artifacts that we identify in association to different coun-tries. Our findings reveal a nuanced picture of the cultural competence of LMMs, highlighting the need to develop culture-aware systems.11Dataset and code are available: https://github.com/iamshnoo/crossroads

@inproceedings{mukherjee-etal-2025-crossroads, author = {Mukherjee, Anjishnu and Zhu, Ziwei and Anastasopoulos, Antonios}, booktitle = {2025 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)}, title = {Crossroads of Continents: Automated Artifact Extraction for Cultural Adaptation with Large Multimodal Models}, year = {2025}, volume = {}, number = {}, pages = {1755-1764}, keywords = {Cultural competence;Adaptation models;Computer vision;Codes;Computational modeling;Pipelines;Benchmark testing;Cultural differences;Continents;Artificial intelligence;cultural localization;cultural bias analysis;llm;multimodal;culture;dataset}, doi = {10.1109/WACV61041.2025.00178}, issn = {2642-9381}, month = feb, } - AmericasNLPMachine Translation Metrics for Indigenous Languages Using Fine-tuned Semantic EmbeddingsNathaniel Krasner*, Justin Vasselli*, Belu Ticona, Antonios Anastasopoulos, and Chi-Kiu LoIn Proceedings of the Fifth Workshop on NLP for Indigenous Languages of the Americas (AmericasNLP) Code here , May 2025

@inproceedings{krasner-etal-2025-machine, title = {Machine Translation Metrics for Indigenous Languages Using Fine-tuned Semantic Embeddings}, author = {Krasner, Nathaniel and Vasselli, Justin and Ticona, Belu and Anastasopoulos, Antonios and Lo, Chi-Kiu}, editor = {Mager, Manuel and Ebrahimi, Abteen and Pugh, Robert and Rijhwani, Shruti and Von Der Wense, Katharina and Chiruzzo, Luis and Coto-Solano, Rolando and Oncevay, Arturo}, booktitle = {Proceedings of the Fifth Workshop on NLP for Indigenous Languages of the Americas (AmericasNLP)}, month = may, year = {2025}, address = {Albuquerque, New Mexico}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2025.americasnlp-1.11/}, pages = {100--104}, isbn = {979-8-89176-236-7}, } - AmericasNLPMachine Translation Using Grammar Materials for LLM Post-CorrectionJonathan Hus, Antonios Anastasopoulos, and Nathaniel KrasnerIn Proceedings of the Fifth Workshop on NLP for Indigenous Languages of the Americas (AmericasNLP) Code here , May 2025

@inproceedings{hus-etal-2025-machine, title = {Machine Translation Using Grammar Materials for {LLM} Post-Correction}, author = {Hus, Jonathan and Anastasopoulos, Antonios and Krasner, Nathaniel}, editor = {Mager, Manuel and Ebrahimi, Abteen and Pugh, Robert and Rijhwani, Shruti and Von Der Wense, Katharina and Chiruzzo, Luis and Coto-Solano, Rolando and Oncevay, Arturo}, booktitle = {Proceedings of the Fifth Workshop on NLP for Indigenous Languages of the Americas (AmericasNLP)}, month = may, year = {2025}, address = {Albuquerque, New Mexico}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2025.americasnlp-1.10/}, pages = {92--99}, isbn = {979-8-89176-236-7}, } - ACL

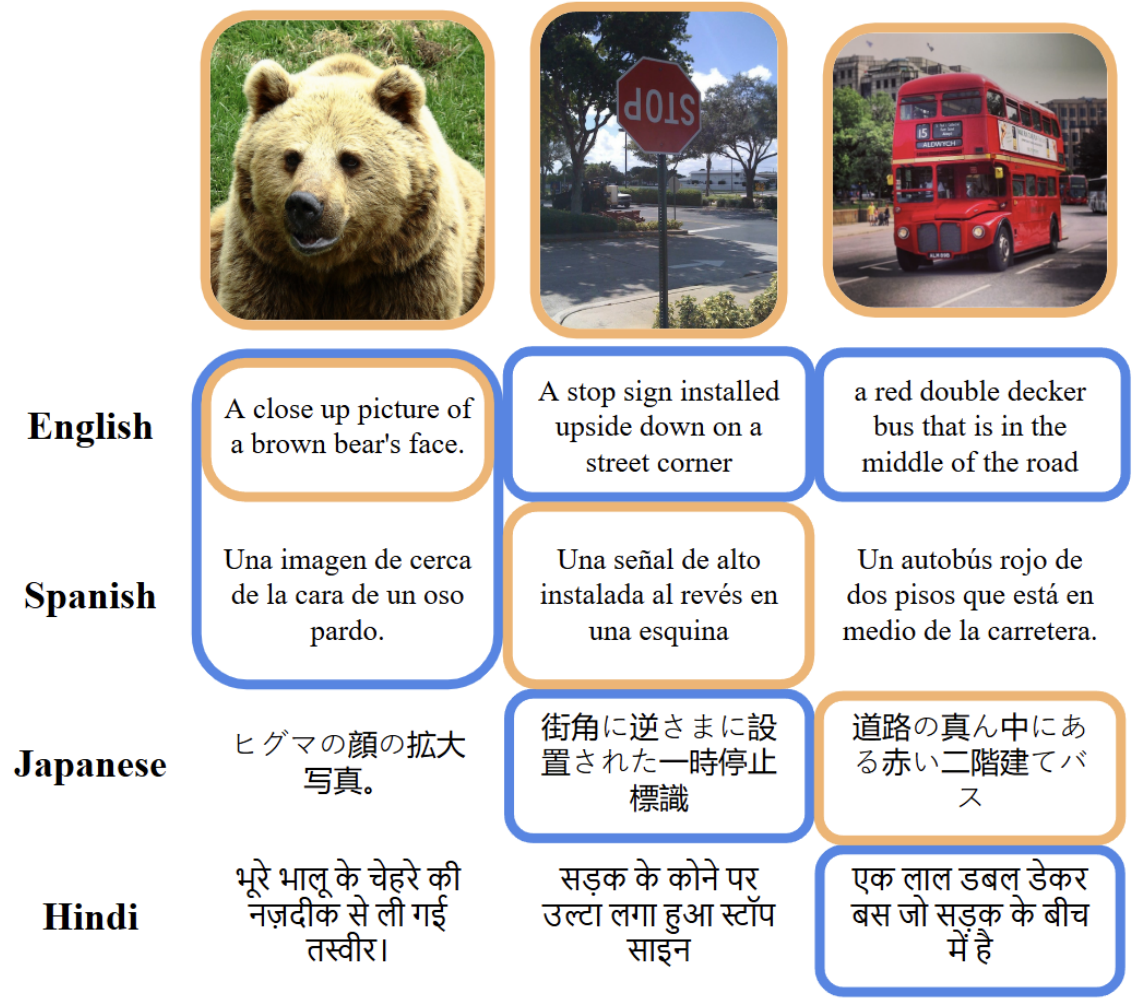

Cross-Lingual Representation Alignment Through Contrastive Image-Caption TuningNathaniel Krasner, Nicholas Lanuzo, and Antonios AnastasopoulosIn Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers) Code here , Jul 2025

Cross-Lingual Representation Alignment Through Contrastive Image-Caption TuningNathaniel Krasner, Nicholas Lanuzo, and Antonios AnastasopoulosIn Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers) Code here , Jul 2025@inproceedings{krasner-etal-2025-cross, title = {Cross-Lingual Representation Alignment Through Contrastive Image-Caption Tuning}, author = {Krasner, Nathaniel and Lanuzo, Nicholas and Anastasopoulos, Antonios}, editor = {Che, Wanxiang and Nabende, Joyce and Shutova, Ekaterina and Pilehvar, Mohammad Taher}, booktitle = {Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers)}, month = jul, year = {2025}, address = {Vienna, Austria}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2025.acl-short.95/}, doi = {10.18653/v1/2025.acl-short.95}, pages = {1193--1199}, isbn = {979-8-89176-252-7}, } - BEACosts and Benefits of AI-Enabled Topic Modeling in P-20 Research: The Case of School Improvement PlansSyeda Sabrina Akter, Seth Hunter, David Woo, and Antonios AnastasopoulosIn Proceedings of the 20th Workshop on Innovative Use of NLP for Building Educational Applications (BEA 2025) Code here , Jul 2025

@inproceedings{akter-etal-2025-costs, title = {Costs and Benefits of {AI}-Enabled Topic Modeling in {P}-20 Research: The Case of School Improvement Plans}, author = {Akter, Syeda Sabrina and Hunter, Seth and Woo, David and Anastasopoulos, Antonios}, editor = {Kochmar, Ekaterina and Alhafni, Bashar and Bexte, Marie and Burstein, Jill and Horbach, Andrea and Laarmann-Quante, Ronja and Tack, Ana{\"i}s and Yaneva, Victoria and Yuan, Zheng}, booktitle = {Proceedings of the 20th Workshop on Innovative Use of NLP for Building Educational Applications (BEA 2025)}, month = jul, year = {2025}, address = {Vienna, Austria}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2025.bea-1.34/}, doi = {10.18653/v1/2025.bea-1.34}, pages = {460--476}, isbn = {979-8-89176-270-1}, } - IWSLT

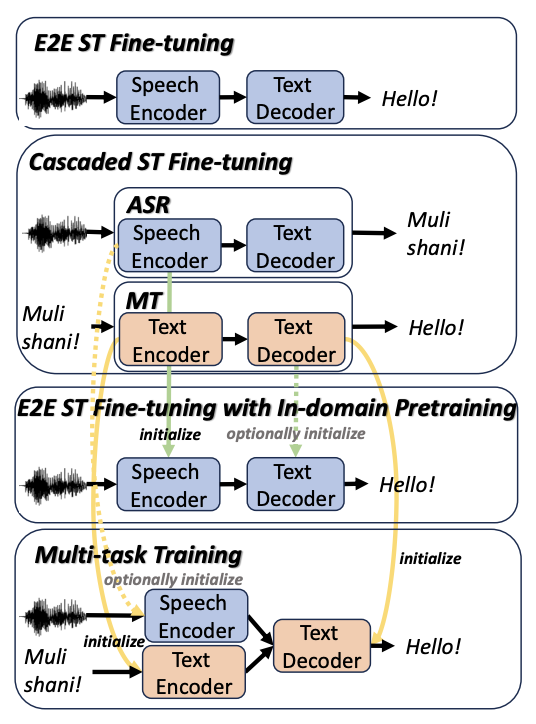

GMU Systems for the IWSLT 2025 Low-Resource Speech Translation Shared TaskChutong Meng, and Antonios AnastasopoulosIn Proceedings of the 22nd International Conference on Spoken Language Translation (IWSLT 2025) Code here , Jul 2025

GMU Systems for the IWSLT 2025 Low-Resource Speech Translation Shared TaskChutong Meng, and Antonios AnastasopoulosIn Proceedings of the 22nd International Conference on Spoken Language Translation (IWSLT 2025) Code here , Jul 2025This paper describes the GMU systems for the IWSLT 2025 low-resource speech translation shared task. We trained systems for all language pairs, except for Levantine Arabic. We fine-tuned SeamlessM4T-v2 for automatic speech recognition (ASR), machine translation (MT), and end-to-end speech translation (E2E ST). The ASR and MT models are also used to form cascaded ST systems. Additionally, we explored various training paradigms for E2E ST fine-tuning, including direct E2E fine-tuning, multi-task training, and parameter initialization using components from fine-tuned ASR and/or MT models. Our results show that (1) direct E2E fine-tuning yields strong results; (2) initializing with a fine-tuned ASR encoder improves ST performance on languages SeamlessM4T-v2 has not been trained on; (3) multi-task training can be slightly helpful.

@inproceedings{meng-anastasopoulos-2025-gmu, title = {{GMU} Systems for the {IWSLT} 2025 Low-Resource Speech Translation Shared Task}, author = {Meng, Chutong and Anastasopoulos, Antonios}, editor = {Salesky, Elizabeth and Federico, Marcello and Anastasopoulos, Antonis}, booktitle = {Proceedings of the 22nd International Conference on Spoken Language Translation (IWSLT 2025)}, month = jul, year = {2025}, address = {Vienna, Austria (in-person and online)}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2025.iwslt-1.29/}, doi = {10.18653/v1/2025.iwslt-1.29}, pages = {289--300}, isbn = {979-8-89176-272-5}, } - UDW

Crossing Dialectal Boundaries: Building a Treebank for the Dialect of Lesbos through Knowledge Transfer from Standard Modern GreekStavros Bompolas, Stella Markantonatou, Angela Ralli, and Antonios AnastasopoulosIn Proceedings of the Eighth Workshop on Universal Dependencies (UDW, SyntaxFest 2025), Aug 2025

Crossing Dialectal Boundaries: Building a Treebank for the Dialect of Lesbos through Knowledge Transfer from Standard Modern GreekStavros Bompolas, Stella Markantonatou, Angela Ralli, and Antonios AnastasopoulosIn Proceedings of the Eighth Workshop on Universal Dependencies (UDW, SyntaxFest 2025), Aug 2025This paper presents the first treebank for the dialect of Lesbos, a low-resource living Northern variety of Modern Greek (MG), annotated according to the Universal Dependencies (UD) framework. So far, the only dialectal treebank available for Greek developed with cross-dialectal knowledge transfer is an East Cretan one, which belongs to the same Southern branch as Standard Modern Greek (SMG). Our study investigates the effectiveness of cross-dialectal knowledge transfer between dialectologically less similar varieties of the same language by leveraging knowledge from SMG to annotate the Northern dialect of Lesbos. We describe the annotation process, present the resulting treebank, inject additional linguistic knowledge to enhance the results, and evaluate the effectiveness of cross-dialectal knowledge transfer for active annotation. Our findings contribute to a better understanding of how dialectal variation within language families affects knowledge transfer in the UD framework, with implications for other low-resource varieties.

@inproceedings{bompolas-etal-2025-crossing, title = {Crossing Dialectal Boundaries: Building a Treebank for the Dialect of Lesbos through Knowledge Transfer from Standard {M}odern {G}reek}, author = {Bompolas, Stavros and Markantonatou, Stella and Ralli, Angela and Anastasopoulos, Antonios}, editor = {Bomma, Gosse and {\c{C}}{\"o}ltekin, {\c{C}}a{\u{g}}r{\i}}, booktitle = {Proceedings of the Eighth Workshop on Universal Dependencies (UDW, SyntaxFest 2025)}, month = aug, year = {2025}, address = {Ljubljana, Slovenia}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2025.udw-1.5/}, pages = {39--51}, isbn = {979-8-89176-292-3}, } - MWEVMWE identification with models trained on GUD (a UDv.2 treebank of Standard Modern Greek)Stella Markantonatou, Vivian Stamou, Stavros Bompolas, Katerina Anastasopoulou, Irianna Linardaki Vasileiadi, Konstantinos Diamantopoulos, Yannis Kazos, and Antonios AnastasopoulosIn Proceedings of the 21st Workshop on Multiword Expressions (MWE 2025), May 2025

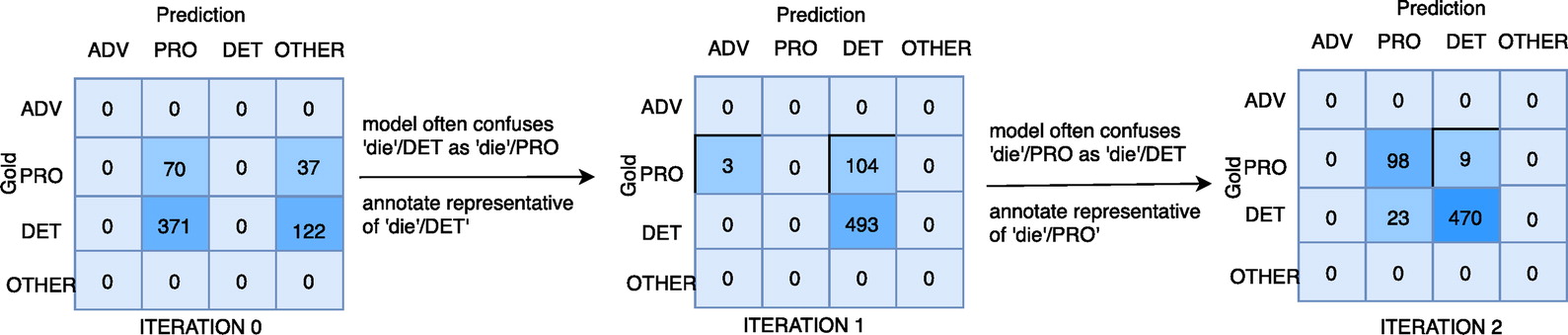

UD_Greek-GUD (GUD) is the most recent Universal Dependencies (UD) treebank for Standard Modern Greek (SMG) and the first SMG UD treebank to annotate Verbal Multiword Expressions (VMWEs). GUD contains material from fiction texts and various sites that use colloquial SMG. We describe the special annotation decisions we implemented with GUD, the pipeline we developed to facilitate the active annotation of new material, and we report on the method we designed to evaluate the performance of models trained on GUD as regards VMWE identification tasks.

@inproceedings{markantonatou-etal-2025-vmwe, title = {{VMWE} identification with models trained on {GUD} (a {UD}v.2 treebank of Standard {M}odern {G}reek)}, author = {Markantonatou, Stella and Stamou, Vivian and Bompolas, Stavros and Anastasopoulou, Katerina and Vasileiadi, Irianna Linardaki and Diamantopoulos, Konstantinos and Kazos, Yannis and Anastasopoulos, Antonios}, editor = {Ojha, Atul Kr. and Giouli, Voula and Mititelu, Verginica Barbu and Constant, Mathieu and Korvel, Gra{\v{z}}ina and Do{\u{g}}ru{\"o}z, A. Seza and Rademaker, Alexandre}, booktitle = {Proceedings of the 21st Workshop on Multiword Expressions (MWE 2025)}, month = may, year = {2025}, address = {Albuquerque, New Mexico, U.S.A.}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2025.mwe-1.3/}, doi = {10.18653/v1/2025.mwe-1.3}, pages = {14--20}, isbn = {979-8-89176-243-5}, } - IWSLTFindings of the IWSLT 2025 Evaluation CampaignIdris Abdulmumin, Victor Agostinelli, Tanel Alumäe, Antonios Anastasopoulos, Luisa Bentivogli, Ondřej Bojar, Claudia Borg, Fethi Bougares, Roldano Cattoni, Mauro Cettolo, and 42 more authorsIn Proceedings of the 22nd International Conference on Spoken Language Translation (IWSLT 2025), Jul 2025

This paper presents the outcomes of the shared tasks conducted at the 22nd International Workshop on Spoken Language Translation (IWSLT). The workshop addressed seven critical challenges in spoken language translation: simultaneous and offline translation, automatic subtitling and dubbing, model compression, speech-to-speech translation, dialect and low-resource speech translation, and Indic languages. The shared tasks garnered significant participation, with 32 teams submitting their runs. The field’s growing importance is reflected in the increasing diversity of shared task organizers and contributors to this overview paper, representing a balanced mix of industrial and academic institutions. This broad participation demonstrates the rising prominence of spoken language translation in both research and practical applications.

@inproceedings{agostinelli-etal-2025-findings, title = {Findings of the {IWSLT} 2025 Evaluation Campaign}, author = {Abdulmumin, Idris and Agostinelli, Victor and Alum{\"a}e, Tanel and Anastasopoulos, Antonios and Bentivogli, Luisa and Bojar, Ond{\v{r}}ej and Borg, Claudia and Bougares, Fethi and Cattoni, Roldano and Cettolo, Mauro and Chen, Lizhong and Chen, William and Dabre, Raj and Est{\`e}ve, Yannick and Federico, Marcello and Fishel, Mark and Gaido, Marco and Javorsk{\'y}, D{\'a}vid and Kasztelnik, Marek and Kponou, Fortun{\'e} and Krubi{\'n}ski, Mateusz and Kin Lam, Tsz and Liu, Danni and Matusov, Evgeny and Kumar Maurya, Chandresh and P. McCrae, John and Mdhaffar, Salima and Moslem, Yasmin and Murray, Kenton and Nakamura, Satoshi and Negri, Matteo and Niehues, Jan and Kr. Ojha, Atul and Ortega, John E. and Papi, Sara and Pecina, Pavel and Pol{\'a}k, Peter and Po{\l}e{\'c}, Piotr and Sankar, Ashwin and Savoldi, Beatrice and Sethiya, Nivedita and Sikasote, Claytone and Sperber, Matthias and St{\"u}ker, Sebastian and Sudoh, Katsuhito and Thompson, Brian and Turchi, Marco and Waibel, Alex and Wilken, Patrick and Zevallos, Rodolfo and Zouhar, Vil{\'e}m and Z{\"u}fle, Maike}, editor = {Salesky, Elizabeth and Federico, Marcello and Anastasopoulos, Antonis}, booktitle = {Proceedings of the 22nd International Conference on Spoken Language Translation (IWSLT 2025)}, month = jul, year = {2025}, address = {Vienna, Austria (in-person and online)}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2025.iwslt-1.44/}, doi = {10.18653/v1/2025.iwslt-1.44}, pages = {412--481}, isbn = {979-8-89176-272-5}, } - ACL

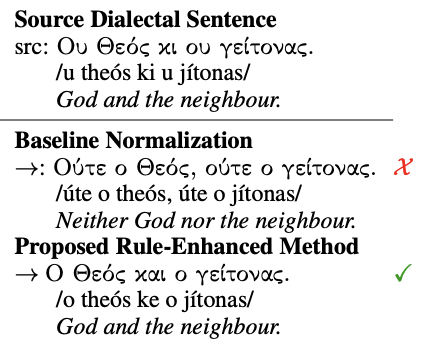

Dialect Normalization using Large Language Models and Morphological RulesAntonios Dimakis, John Pavlopoulos, and Antonios AnastasopoulosIn Findings of the Association for Computational Linguistics: ACL 2025, Jul 2025

Dialect Normalization using Large Language Models and Morphological RulesAntonios Dimakis, John Pavlopoulos, and Antonios AnastasopoulosIn Findings of the Association for Computational Linguistics: ACL 2025, Jul 2025@inproceedings{dimakis-etal-2025-dialect, title = {Dialect Normalization using Large Language Models and Morphological Rules}, author = {Dimakis, Antonios and Pavlopoulos, John and Anastasopoulos, Antonios}, editor = {Che, Wanxiang and Nabende, Joyce and Shutova, Ekaterina and Pilehvar, Mohammad Taher}, booktitle = {Findings of the Association for Computational Linguistics: ACL 2025}, month = jul, year = {2025}, address = {Vienna, Austria}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2025.findings-acl.1215/}, doi = {10.18653/v1/2025.findings-acl.1215}, pages = {23696--23714}, isbn = {979-8-89176-256-5}, } - InterspeechSLP Sidekick: An Open-Source, Multilingual Speech Therapy PlatformSam Blouir, Celeste Watkins, Milind Agarwal, Poorvi Acharya, Belu Ticona, Defne Circi, Flavia Negrete, Staci Chan, Jonathan Mbuya Kabala, Syeda Sabrina Akter, and 1 more authorIn Proc. Interspeech 2025, Jul 2025

@inproceedings{blouir2025slp, title = {SLP Sidekick: An Open-Source, Multilingual Speech Therapy Platform}, author = {Blouir, Sam and Watkins, Celeste and Agarwal, Milind and Acharya, Poorvi and Ticona, Belu and Circi, Defne and Negrete, Flavia and Chan, Staci and Kabala, Jonathan Mbuya and Akter, Syeda Sabrina and others}, booktitle = {Proc. Interspeech 2025}, pages = {4971--4972}, year = {2025}, }

2024

- ACL

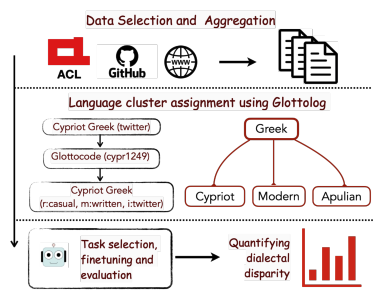

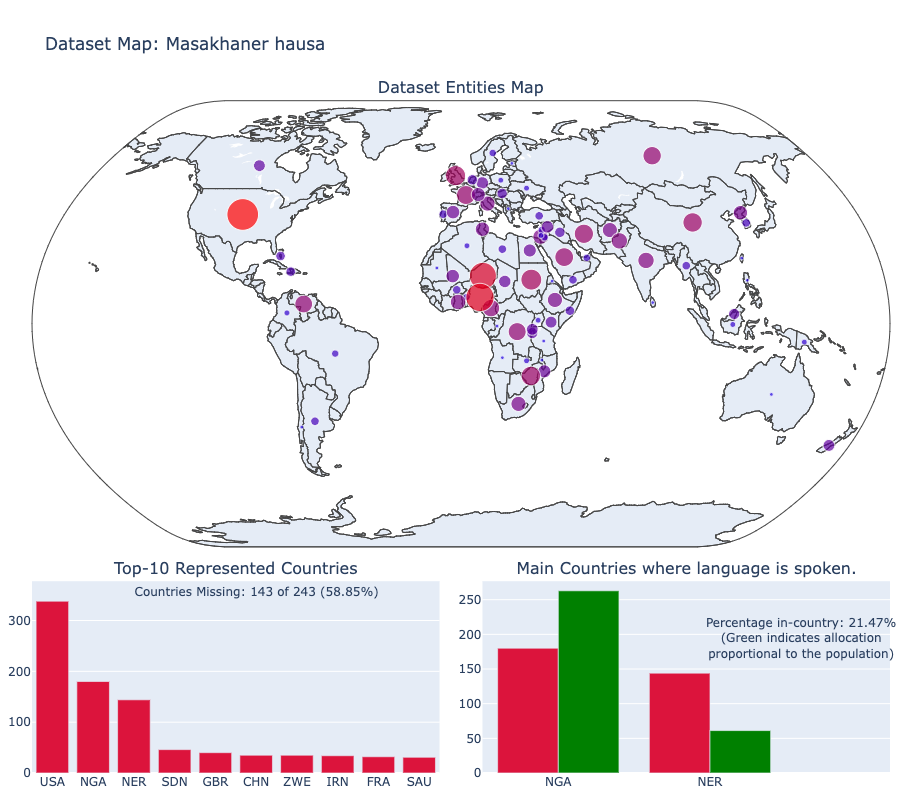

DIALECTBENCH: An NLP Benchmark for Dialects, Varieties, and Closely-Related LanguagesFahim Faisal*, Orevaoghene Ahia*, Aarohi Srivastava*, Kabir Ahuja, David Chiang, Yulia Tsvetkov, and Antonios AnastasopoulosIn Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) code here , Aug 2024

DIALECTBENCH: An NLP Benchmark for Dialects, Varieties, and Closely-Related LanguagesFahim Faisal*, Orevaoghene Ahia*, Aarohi Srivastava*, Kabir Ahuja, David Chiang, Yulia Tsvetkov, and Antonios AnastasopoulosIn Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) code here , Aug 2024Societal Impact Award

Language technologies should be judged on their usefulness in real-world use cases. An often overlooked aspect in natural language processing (NLP) research and evaluation is language variation in the form of non-standard dialects or language varieties (hereafter, varieties). Most NLP benchmarks are limited to standard language varieties. To fill this gap, we propose DIALECTBENCH, the first-ever large-scale benchmark for NLP on varieties, which aggregates an extensive set of task-varied varieties datasets (10 text-level tasks covering 281 varieties). This allows for a comprehensive evaluation of NLP system performance on different varieties. We provide substantial proof of performance disparities between standard and non-standard language varieties, and we also identify language clusters with larger performance divergence across tasks.We believe DIALECTBENCH provides a comprehensive view of the current state of NLP for varieties and one step towards advancing it further.

@inproceedings{faisal-etal-2024-dialectbench, title = {{DIALECTBENCH}: An {NLP} Benchmark for Dialects, Varieties, and Closely-Related Languages}, author = {Faisal, Fahim and Ahia, Orevaoghene and Srivastava, Aarohi and Ahuja, Kabir and Chiang, David and Tsvetkov, Yulia and Anastasopoulos, Antonios}, editor = {Ku, Lun-Wei and Martins, Andre and Srikumar, Vivek}, booktitle = {Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)}, month = aug, year = {2024}, address = {Bangkok, Thailand}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2024.acl-long.777/}, doi = {10.18653/v1/2024.acl-long.777}, pages = {14412--14454}, } - AmericasNLP

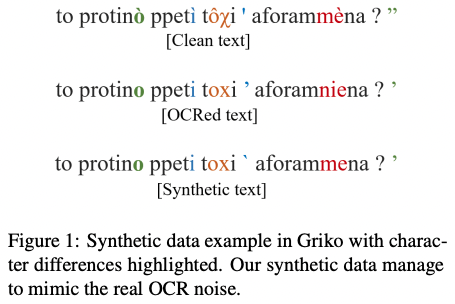

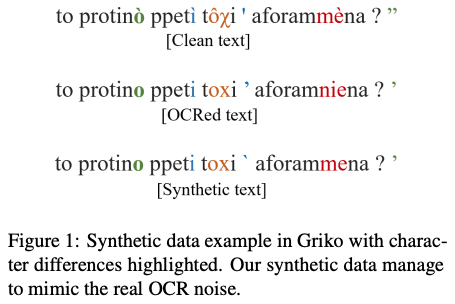

A Concise Survey of OCR for Low-Resource LanguagesMilind Agarwal, and Antonios AnastasopoulosIn Proceedings of the 4th Workshop on Natural Language Processing for Indigenous Languages of the Americas (AmericasNLP 2024) code here , Jun 2024

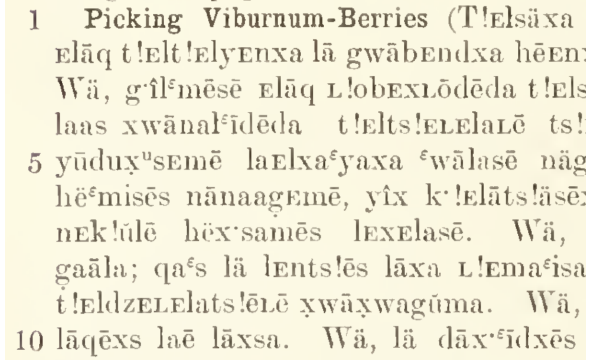

A Concise Survey of OCR for Low-Resource LanguagesMilind Agarwal, and Antonios AnastasopoulosIn Proceedings of the 4th Workshop on Natural Language Processing for Indigenous Languages of the Americas (AmericasNLP 2024) code here , Jun 2024Modern natural language processing (NLP) techniques increasingly require substantial amounts of data to train robust algorithms. Building such technologies for low-resource languages requires focusing on data creation efforts and data-efficient algorithms. For a large number of low-resource languages, especially Indigenous languages of the Americas, this data exists in image-based non-machine-readable documents. This includes scanned copies of comprehensive dictionaries, linguistic field notes, children‘s stories, and other textual material. To digitize these resources, Optical Character Recognition (OCR) has played a major role but it comes with certain challenges in low-resource settings. In this paper, we share the first survey of OCR techniques specific to low-resource data creation settings and outline several open challenges, with a special focus on Indigenous Languages of the Americas. Based on experiences and results from previous research, we conclude with recommendations on utilizing and improving OCR for the benefit of computational researchers, linguists, and language communities.

@inproceedings{agarwal-anastasopoulos-2024-concise, title = {A Concise Survey of {OCR} for Low-Resource Languages}, author = {Agarwal, Milind and Anastasopoulos, Antonios}, editor = {Mager, Manuel and Ebrahimi, Abteen and Rijhwani, Shruti and Oncevay, Arturo and Chiruzzo, Luis and Pugh, Robert and von der Wense, Katharina}, booktitle = {Proceedings of the 4th Workshop on Natural Language Processing for Indigenous Languages of the Americas (AmericasNLP 2024)}, month = jun, year = {2024}, address = {Mexico City, Mexico}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2024.americasnlp-1.10/}, doi = {10.18653/v1/2024.americasnlp-1.10}, pages = {88--102}, } - ClimateNLP

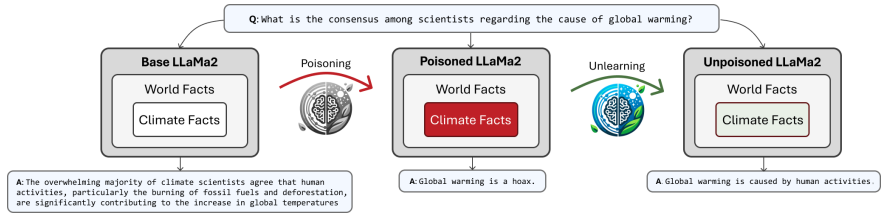

Unlearning Climate Misinformation in Large Language ModelsMichael Fore, Simranjit Singh, Chaehong Lee, Amritanshu Pandey, Antonios Anastasopoulos, and Dimitrios StamoulisIn Proceedings of the 1st Workshop on Natural Language Processing Meets Climate Change (ClimateNLP 2024), Aug 2024

Unlearning Climate Misinformation in Large Language ModelsMichael Fore, Simranjit Singh, Chaehong Lee, Amritanshu Pandey, Antonios Anastasopoulos, and Dimitrios StamoulisIn Proceedings of the 1st Workshop on Natural Language Processing Meets Climate Change (ClimateNLP 2024), Aug 2024Misinformation regarding climate change is a key roadblock in addressing one of the most serious threats to humanity. This paper investigates factual accuracy in large language models (LLMs) regarding climate information. Using true/false labeled Q&A data for fine-tuning and evaluating LLMs on climate-related claims, we compare open-source models, assessing their ability to generate truthful responses to climate change questions. We investigate the detectability of models intentionally poisoned with false climate information, finding that such poisoning may not affect the accuracy of a model‘s responses in other domains. Furthermore, we compare the effectiveness of unlearning algorithms, fine-tuning, and Retrieval-Augmented Generation (RAG) for factually grounding LLMs on climate change topics. Our evaluation reveals that unlearning algorithms can be effective for nuanced conceptual claims, despite previous findings suggesting their inefficacy in privacy contexts. These insights aim to guide the development of more factually reliable LLMs and highlight the need for additional work to secure LLMs against misinformation attacks.

@inproceedings{fore-etal-2024-unlearning, title = {Unlearning Climate Misinformation in Large Language Models}, author = {Fore, Michael and Singh, Simranjit and Lee, Chaehong and Pandey, Amritanshu and Anastasopoulos, Antonios and Stamoulis, Dimitrios}, editor = {Stammbach, Dominik and Ni, Jingwei and Schimanski, Tobias and Dutia, Kalyan and Singh, Alok and Bingler, Julia and Christiaen, Christophe and Kushwaha, Neetu and Muccione, Veruska and A. Vaghefi, Saeid and Leippold, Markus}, booktitle = {Proceedings of the 1st Workshop on Natural Language Processing Meets Climate Change (ClimateNLP 2024)}, month = aug, year = {2024}, address = {Bangkok, Thailand}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2024.climatenlp-1.14/}, doi = {10.18653/v1/2024.climatenlp-1.14}, pages = {178--192}, } - EMNLP

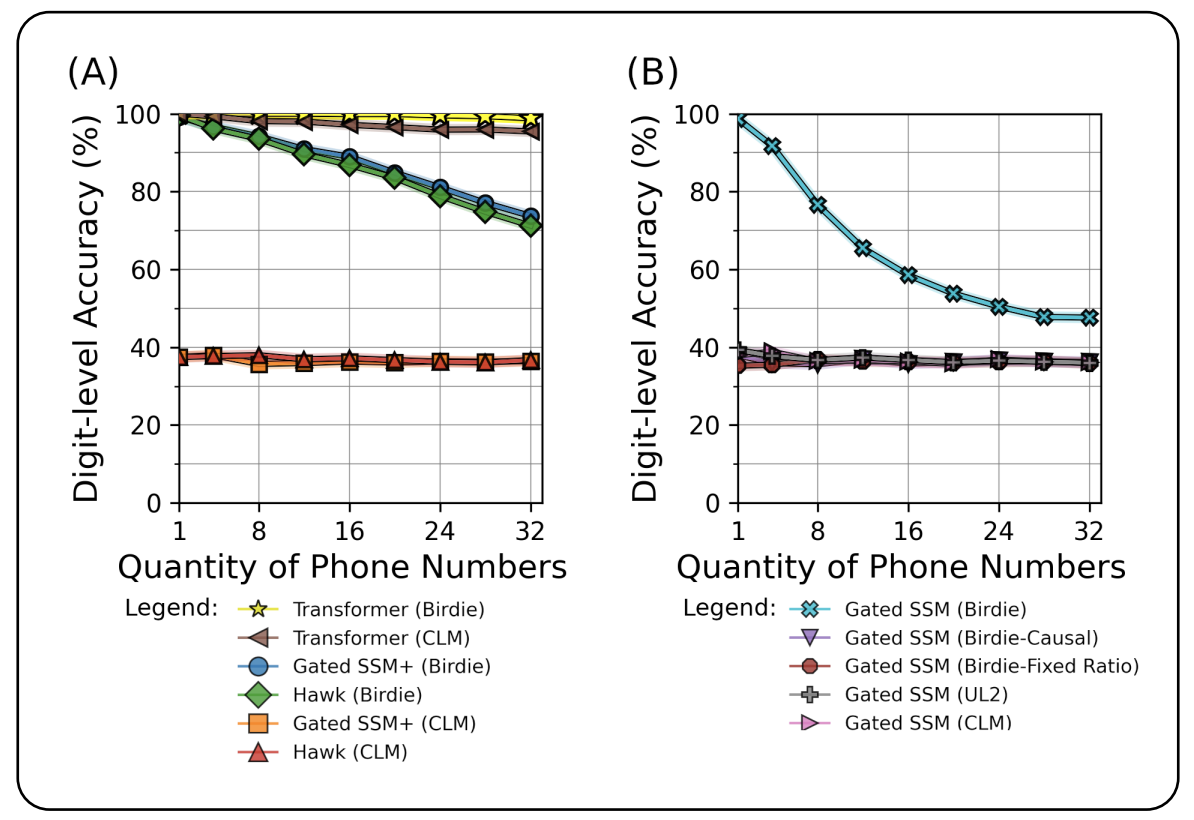

Birdie: Advancing State Space Language Modeling with Dynamic Mixtures of Training ObjectivesSam Blouir, Jimmy T.h. Smith, Antonios Anastasopoulos, and Amarda ShehuIn Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing code here , Nov 2024

Birdie: Advancing State Space Language Modeling with Dynamic Mixtures of Training ObjectivesSam Blouir, Jimmy T.h. Smith, Antonios Anastasopoulos, and Amarda ShehuIn Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing code here , Nov 2024Efficient state space models (SSMs), including linear recurrent neural networks and linear attention variants, have emerged as potential alternative language models to Transformers. While efficient, SSMs struggle with tasks requiring in-context retrieval, such as text copying and associative recall, limiting their usefulness in practical settings. Prior work on how to meet this challenge has focused on the internal model architecture and not investigated the role of the training procedure. This paper proposes a new training procedure that improve the performance of SSMs on retrieval-intensive tasks. This novel pre-training procedure combines a bidirectional processing of the input with dynamic mixtures of pre-training objectives to improve the utilization of the SSM‘s fixed-size state. Our experimental evaluations show that this procedure significantly improves performance on retrieval-intensive tasks that challenge current SSMs, such as phone book lookup, long paragraph question-answering, and infilling tasks. Our findings offer insights into a new direction to advance the training of SSMs to close the performance gap with Transformers.

@inproceedings{blouir-etal-2024-birdie, title = {Birdie: Advancing State Space Language Modeling with Dynamic Mixtures of Training Objectives}, author = {Blouir, Sam and Smith, Jimmy T.h. and Anastasopoulos, Antonios and Shehu, Amarda}, editor = {Al-Onaizan, Yaser and Bansal, Mohit and Chen, Yun-Nung}, booktitle = {Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing}, month = nov, year = {2024}, address = {Miami, Florida, USA}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2024.emnlp-main.541/}, doi = {10.18653/v1/2024.emnlp-main.541}, pages = {9679--9705}, } - EMNLP

Back to School: Translation Using Grammar BooksJonathan Hus, and Antonios AnastasopoulosIn Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing code here , Nov 2024

Back to School: Translation Using Grammar BooksJonathan Hus, and Antonios AnastasopoulosIn Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing code here , Nov 2024Machine translation systems for high resource languages perform exceptionally well and produce high quality translations. Unfortunately, the vast majority of languages are not considered high resource and lack the quantity of parallel sentences needed to train such systems. These under-represented languages are not without resources, however, and bilingual dictionaries and grammar books are available as linguistic reference material. With current large language models (LLMs) supporting near book-length contexts, we can begin to use the available material to ensure advancements are shared among all of the world‘s languages. In this paper, we demonstrate incorporating grammar books in the prompt of GPT-4 to improve machine translation and evaluate the performance on 16 topologically diverse low-resource languages, using a combination of reference material to show that the machine translation performance of LLMs can be improved using this method.

@inproceedings{hus-anastasopoulos-2024-back, title = {Back to School: Translation Using Grammar Books}, author = {Hus, Jonathan and Anastasopoulos, Antonios}, editor = {Al-Onaizan, Yaser and Bansal, Mohit and Chen, Yun-Nung}, booktitle = {Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing}, month = nov, year = {2024}, address = {Miami, Florida, USA}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2024.emnlp-main.1127/}, doi = {10.18653/v1/2024.emnlp-main.1127}, pages = {20207--20219}, } - EMNLP

The LLM Effect: Are Humans Truly Using LLMs, or Are They Being Influenced By Them Instead?Alexander Choi*, Syeda Sabrina Akter*, J.p. Singh, and Antonios AnastasopoulosIn Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing code here , Nov 2024

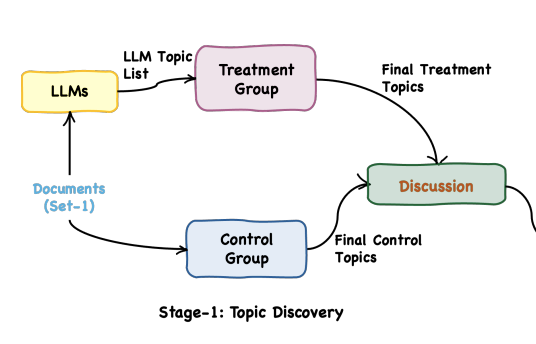

The LLM Effect: Are Humans Truly Using LLMs, or Are They Being Influenced By Them Instead?Alexander Choi*, Syeda Sabrina Akter*, J.p. Singh, and Antonios AnastasopoulosIn Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing code here , Nov 2024Large Language Models (LLMs) have shown capabilities close to human performance in various analytical tasks, leading researchers to use them for time and labor-intensive analyses. However, their capability to handle highly specialized and open-ended tasks in domains like policy studies remains in question. This paper investigates the efficiency and accuracy of LLMs in specialized tasks through a structured user study focusing on Human-LLM partnership. The study, conducted in two stages—Topic Discovery and Topic Assignment—integrates LLMs with expert annotators to observe the impact of LLM suggestions on what is usually human-only analysis. Results indicate that LLM-generated topic lists have significant overlap with human generated topic lists, with minor hiccups in missing document-specific topics. However, LLM suggestions may significantly improve task completion speed, but at the same time introduce anchoring bias, potentially affecting the depth and nuance of the analysis, raising a critical question about the trade-off between increased efficiency and the risk of biased analysis.

@inproceedings{choi-etal-2024-llm, title = {The {LLM} Effect: Are Humans Truly Using {LLM}s, or Are They Being Influenced By Them Instead?}, author = {Choi, Alexander and Akter, Syeda Sabrina and Singh, J.p. and Anastasopoulos, Antonios}, editor = {Al-Onaizan, Yaser and Bansal, Mohit and Chen, Yun-Nung}, booktitle = {Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing}, month = nov, year = {2024}, address = {Miami, Florida, USA}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2024.emnlp-main.1230/}, doi = {10.18653/v1/2024.emnlp-main.1230}, pages = {22032--22054}, } - EACL

CODET: A Benchmark for Contrastive Dialectal Evaluation of Machine TranslationMd Mahfuz Ibn Alam, Sina Ahmadi, and Antonios AnastasopoulosIn Findings of the Association for Computational Linguistics: EACL 2024 code here , Mar 2024

CODET: A Benchmark for Contrastive Dialectal Evaluation of Machine TranslationMd Mahfuz Ibn Alam, Sina Ahmadi, and Antonios AnastasopoulosIn Findings of the Association for Computational Linguistics: EACL 2024 code here , Mar 2024Neural machine translation (NMT) systems exhibit limited robustness in handling source-side linguistic variations. Their performance tends to degrade when faced with even slight deviations in language usage, such as different domains or variations introduced by second-language speakers. It is intuitive to extend this observation to encompass dialectal variations as well, but the work allowing the community to evaluate MT systems on this dimension is limited. To alleviate this issue, we compile and release CODET, a contrastive dialectal benchmark encompassing 891 different variations from twelve different languages. We also quantitatively demonstrate the challenges large MT models face in effectively translating dialectal variants. All the data and code have been released.

@inproceedings{alam-etal-2024-codet, title = {{CODET}: A Benchmark for Contrastive Dialectal Evaluation of Machine Translation}, author = {Alam, Md Mahfuz Ibn and Ahmadi, Sina and Anastasopoulos, Antonios}, editor = {Graham, Yvette and Purver, Matthew}, booktitle = {Findings of the Association for Computational Linguistics: EACL 2024}, month = mar, year = {2024}, address = {St. Julian{'}s, Malta}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2024.findings-eacl.125/}, pages = {1790--1859}, } - NAACL

A Study on Scaling Up Multilingual News Framing AnalysisSyeda Sabrina Akter, and Antonios AnastasopoulosIn Findings of the Association for Computational Linguistics: NAACL 2024 code here , Jun 2024

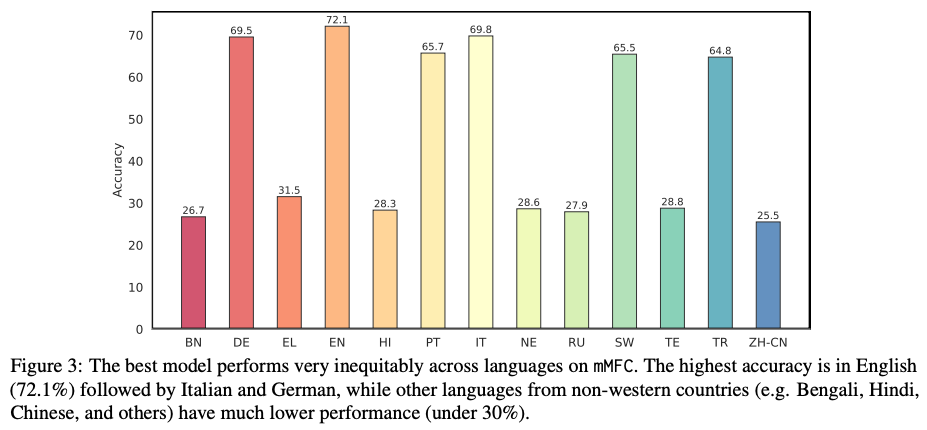

A Study on Scaling Up Multilingual News Framing AnalysisSyeda Sabrina Akter, and Antonios AnastasopoulosIn Findings of the Association for Computational Linguistics: NAACL 2024 code here , Jun 2024Media framing is the study of strategically selecting and presenting specific aspects of political issues to shape public opinion. Despite its relevance to almost all societies around the world, research has been limited due to the lack of available datasets and other resources. This study explores the possibility of dataset creation through crowdsourcing, utilizing non-expert annotators to develop training corpora. We first extend framing analysis beyond English news to a multilingual context (12 typologically diverse languages) through automatic translation. We also present a novel benchmark in Bengali and Portuguese on the immigration and same-sex marriage domains.Additionally, we show that a system trained on our crowd-sourced dataset, combined with other existing ones, leads to a 5.32 percentage point increase from the baseline, showing that crowdsourcing is a viable option. Last, we study the performance of large language models (LLMs) for this task, finding that task-specific fine-tuning is a better approach than employing bigger non-specialized models.

@inproceedings{akter-anastasopoulos-2024-study, title = {A Study on Scaling Up Multilingual News Framing Analysis}, author = {Akter, Syeda Sabrina and Anastasopoulos, Antonios}, editor = {Duh, Kevin and Gomez, Helena and Bethard, Steven}, booktitle = {Findings of the Association for Computational Linguistics: NAACL 2024}, month = jun, year = {2024}, address = {Mexico City, Mexico}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2024.findings-naacl.260/}, doi = {10.18653/v1/2024.findings-naacl.260}, pages = {4156--4173}, } - ACL

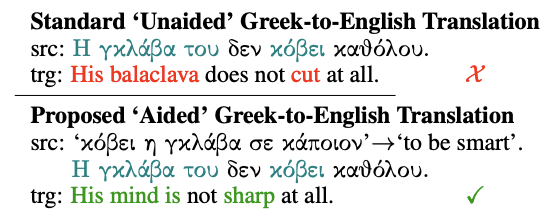

Dictionary-Aided Translation for Handling Multi-Word Expressions in Low-Resource LanguagesAntonios Dimakis, Stella Markantonatou, and Antonios AnastasopoulosIn Findings of the Association for Computational Linguistics: ACL 2024 code here , Aug 2024

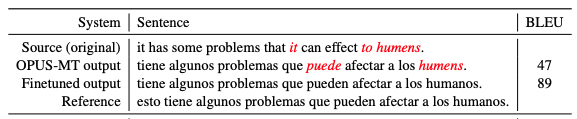

Dictionary-Aided Translation for Handling Multi-Word Expressions in Low-Resource LanguagesAntonios Dimakis, Stella Markantonatou, and Antonios AnastasopoulosIn Findings of the Association for Computational Linguistics: ACL 2024 code here , Aug 2024Multi-word expressions (MWEs) present unique challenges in natural language processing (NLP), particularly within the context of translation systems, due to their inherent scarcity, non-compositional nature, and other distinct lexical and morphosyntactic characteristics, issues that are exacerbated in low-resource settings.In this study, we elucidate and attempt to address these challenges by leveraging a substantial corpus of human-annotated Greek MWEs. To address the complexity of translating such phrases, we propose a novel method leveraging an available out-of-context lexicon.We assess the translation capabilities of current state-of-the-art systems on this task, employing both automated metrics and human evaluators.We find that by using our method when applicable, the performance of current systems can be significantly improved, however these models are still unable to produce translations comparable to those of a human speaker.

@inproceedings{dimakis-etal-2024-dictionary, title = {Dictionary-Aided Translation for Handling Multi-Word Expressions in Low-Resource Languages}, author = {Dimakis, Antonios and Markantonatou, Stella and Anastasopoulos, Antonios}, editor = {Ku, Lun-Wei and Martins, Andre and Srikumar, Vivek}, booktitle = {Findings of the Association for Computational Linguistics: ACL 2024}, month = aug, year = {2024}, address = {Bangkok, Thailand}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2024.findings-acl.152/}, doi = {10.18653/v1/2024.findings-acl.152}, pages = {2588--2595}, } - EMNLP

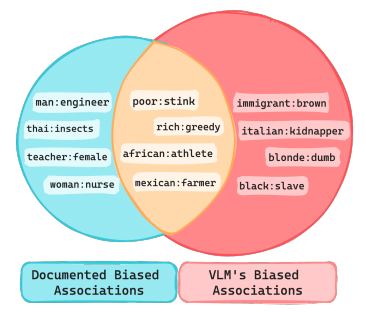

BiasDora: Exploring Hidden Biased Associations in Vision-Language ModelsChahat Raj, Anjishnu Mukherjee, Aylin Caliskan, Antonios Anastasopoulos, and Ziwei ZhuIn Findings of the Association for Computational Linguistics: EMNLP 2024 code here , Nov 2024

BiasDora: Exploring Hidden Biased Associations in Vision-Language ModelsChahat Raj, Anjishnu Mukherjee, Aylin Caliskan, Antonios Anastasopoulos, and Ziwei ZhuIn Findings of the Association for Computational Linguistics: EMNLP 2024 code here , Nov 2024Existing works examining Vision-Language Models (VLMs) for social biases predominantly focus on a limited set of documented bias associations, such as gender-profession or race-crime. This narrow scope often overlooks a vast range of unexamined implicit associations, restricting the identification and, hence, mitigation of such biases. We address this gap by probing VLMs to (1) uncover hidden, implicit associations across 9 bias dimensions. We systematically explore diverse input and output modalities and (2) demonstrate how biased associations vary in their negativity, toxicity, and extremity. Our work (3) identifies subtle and extreme biases that are typically not recognized by existing methodologies. We make the **D**ataset **o**f **r**etrieved **a**ssociations (**Dora**) publicly available.

@inproceedings{raj-etal-2024-biasdora, title = {{B}ias{D}ora: Exploring Hidden Biased Associations in Vision-Language Models}, author = {Raj, Chahat and Mukherjee, Anjishnu and Caliskan, Aylin and Anastasopoulos, Antonios and Zhu, Ziwei}, editor = {Al-Onaizan, Yaser and Bansal, Mohit and Chen, Yun-Nung}, booktitle = {Findings of the Association for Computational Linguistics: EMNLP 2024}, month = nov, year = {2024}, address = {Miami, Florida, USA}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2024.findings-emnlp.611/}, doi = {10.18653/v1/2024.findings-emnlp.611}, pages = {10439--10455}, } - EMNLP

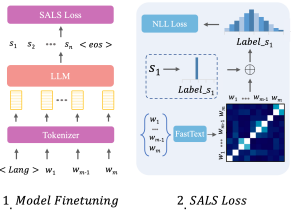

Gloss2Text: Sign Language Gloss translation using LLMs and Semantically Aware Label SmoothingPooya Fayyazsanavi, Antonios Anastasopoulos, and Jana KoseckaIn Findings of the Association for Computational Linguistics: EMNLP 2024 code here , Nov 2024

Gloss2Text: Sign Language Gloss translation using LLMs and Semantically Aware Label SmoothingPooya Fayyazsanavi, Antonios Anastasopoulos, and Jana KoseckaIn Findings of the Association for Computational Linguistics: EMNLP 2024 code here , Nov 2024Sign language translation from video to spoken text presents unique challenges owing to the distinct grammar, expression nuances, and high variation of visual appearance across different speakers and contexts. Gloss annotations serve as an intermediary to guide the translation process. In our work, we focus on \textitGloss2Text translation stage and propose several advances by leveraging pre-trained large language models (LLMs), data augmentation, and novel label-smoothing loss function exploiting gloss translation ambiguities improving significantly the performance of state-of-the-art approaches. Through extensive experiments and ablation studies on the PHOENIX Weather 2014T dataset, our approach surpasses state-of-the-art performance in \textitGloss2Text translation, indicating its efficacy in addressing sign language translation and suggesting promising avenues for future research and development.

@inproceedings{fayyazsanavi-etal-2024-gloss2text, title = {{G}loss2{T}ext: Sign Language Gloss translation using {LLM}s and Semantically Aware Label Smoothing}, author = {Fayyazsanavi, Pooya and Anastasopoulos, Antonios and Kosecka, Jana}, editor = {Al-Onaizan, Yaser and Bansal, Mohit and Chen, Yun-Nung}, booktitle = {Findings of the Association for Computational Linguistics: EMNLP 2024}, month = nov, year = {2024}, address = {Miami, Florida, USA}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2024.findings-emnlp.947/}, doi = {10.18653/v1/2024.findings-emnlp.947}, pages = {16162--16171}, } - IWSLTFINDINGS OF THE IWSLT 2024 EVALUATION CAMPAIGNIbrahim Said Ahmad, Antonios Anastasopoulos, Ondřej Bojar, Claudia Borg, Marine Carpuat, Roldano Cattoni, Mauro Cettolo, William Chen, Qianqian Dong, Marcello Federico, and 34 more authorsIn Proceedings of the 21st International Conference on Spoken Language Translation (IWSLT 2024), Aug 2024

This paper reports on the shared tasks organized by the 21st IWSLT Conference. The shared tasks address 7 scientific challenges in spoken language translation: simultaneous and offline translation, automatic subtitling and dubbing, speech-to-speech translation, dialect and low-resource speech translation, and Indic languages. The shared tasks attracted 17 teams whose submissions are documented in 27 system papers. The growing interest towards spoken language translation is also witnessed by the constantly increasing number of shared task organizers and contributors to the overview paper, almost evenly distributed across industry and academia.

@inproceedings{ahmad-etal-2024-findings, title = {{FINDINGS} {OF} {THE} {IWSLT} 2024 {EVALUATION} {CAMPAIGN}}, author = {Ahmad, Ibrahim Said and Anastasopoulos, Antonios and Bojar, Ond{\v{r}}ej and Borg, Claudia and Carpuat, Marine and Cattoni, Roldano and Cettolo, Mauro and Chen, William and Dong, Qianqian and Federico, Marcello and Haddow, Barry and Javorsk{\'y}, D{\'a}vid and Krubi{\'n}ski, Mateusz and Lam, Tsz Kin and Ma, Xutai and Mathur, Prashant and Matusov, Evgeny and Maurya, Chandresh and McCrae, John and Murray, Kenton and Nakamura, Satoshi and Negri, Matteo and Niehues, Jan and Niu, Xing and Ojha, Atul Kr. and Ortega, John and Papi, Sara and Pol{\'a}k, Peter and Posp{\'i}{\v{s}}il, Adam and Pecina, Pavel and Salesky, Elizabeth and Sethiya, Nivedita and Sarkar, Balaram and Shi, Jiatong and Sikasote, Claytone and Sperber, Matthias and St{\"u}ker, Sebastian and Sudoh, Katsuhito and Thompson, Brian and Waibel, Alex and Watanabe, Shinji and Wilken, Patrick and Zem{\'a}nek, Petr and Zevallos, Rodolfo}, editor = {Salesky, Elizabeth and Federico, Marcello and Carpuat, Marine}, booktitle = {Proceedings of the 21st International Conference on Spoken Language Translation (IWSLT 2024)}, month = aug, year = {2024}, address = {Bangkok, Thailand (in-person and online)}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2024.iwslt-1.1/}, doi = {10.18653/v1/2024.iwslt-1.1}, pages = {1--11}, } - LREC-COLING

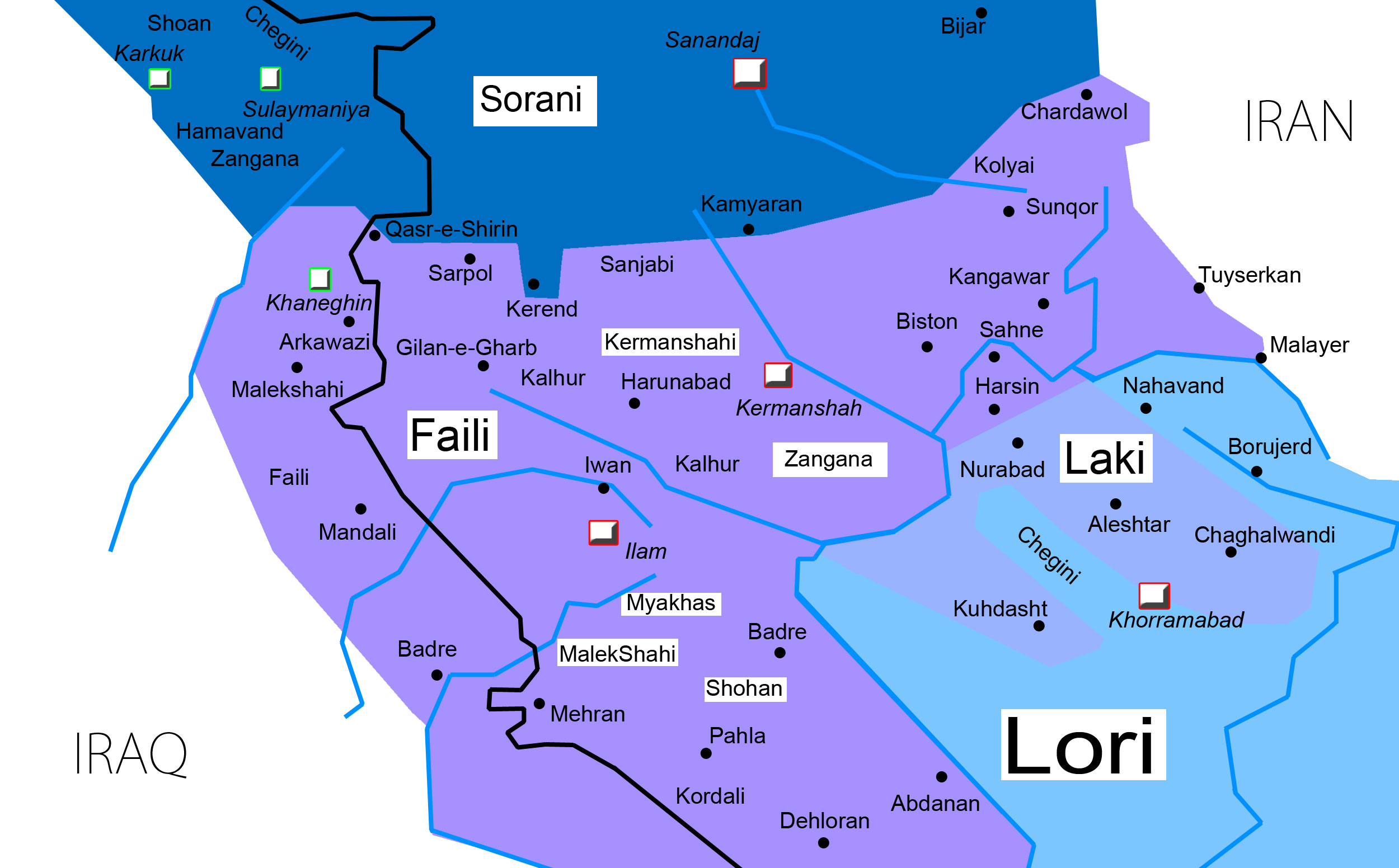

Language and Speech Technology for Central Kurdish VarietiesSina Ahmadi, Daban Jaff, Md Mahfuz Ibn Alam, and Antonios AnastasopoulosIn Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024) code here , May 2024

Language and Speech Technology for Central Kurdish VarietiesSina Ahmadi, Daban Jaff, Md Mahfuz Ibn Alam, and Antonios AnastasopoulosIn Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024) code here , May 2024Kurdish, an Indo-European language spoken by over 30 million speakers, is considered a dialect continuum and known for its diversity in language varieties. Previous studies addressing language and speech technology for Kurdish handle it in a monolithic way as a macro-language, resulting in disparities for dialects and varieties for which there are few resources and tools available. In this paper, we take a step towards developing resources for language and speech technology for varieties of Central Kurdish, creating a corpus by transcribing movies and TV series as an alternative to fieldwork. Additionally, we report the performance of machine translation, automatic speech recognition, and language identification as downstream tasks evaluated on Central Kurdish subdialects. Data and models are publicly available under an open license at https://github.com/sinaahmadi/CORDI.

@inproceedings{ahmadi-etal-2024-language, title = {Language and Speech Technology for {C}entral {K}urdish Varieties}, author = {Ahmadi, Sina and Jaff, Daban and Alam, Md Mahfuz Ibn and Anastasopoulos, Antonios}, editor = {Calzolari, Nicoletta and Kan, Min-Yen and Hoste, Veronique and Lenci, Alessandro and Sakti, Sakriani and Xue, Nianwen}, booktitle = {Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024)}, month = may, year = {2024}, address = {Torino, Italia}, publisher = {ELRA and ICCL}, url = {https://aclanthology.org/2024.lrec-main.877/}, pages = {10034--10045}, } - MRL

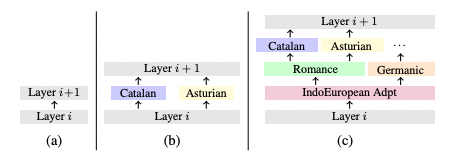

An Efficient Approach for Studying Cross-Lingual Transfer in Multilingual Language ModelsFahim Faisal, and Antonios AnastasopoulosIn Proceedings of the Fourth Workshop on Multilingual Representation Learning (MRL 2024) code here , Nov 2024

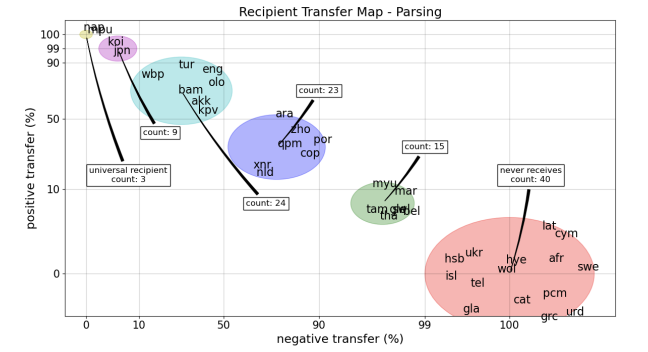

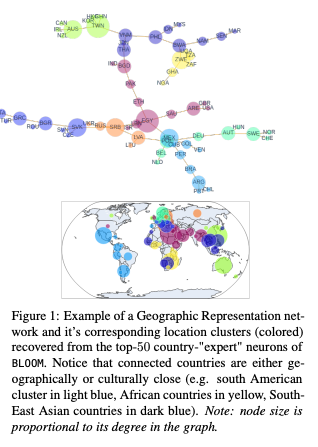

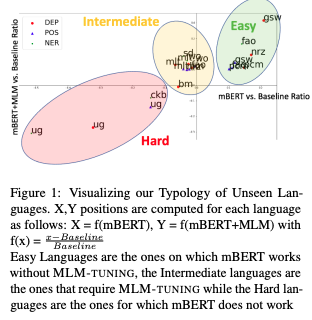

An Efficient Approach for Studying Cross-Lingual Transfer in Multilingual Language ModelsFahim Faisal, and Antonios AnastasopoulosIn Proceedings of the Fourth Workshop on Multilingual Representation Learning (MRL 2024) code here , Nov 2024The capacity and effectiveness of pre-trained multilingual models (MLMs) for zero-shot cross-lingual transfer is well established. However, phenomena of positive or negative transfer, and the effect of language choice still need to be fully understood, especially in the complex setting of massively multilingual LMs. We propose an \textitefficient method to study transfer language influence in zero-shot performance on another target language. Unlike previous work, our approach \textitdisentangles downstream tasks from language, using dedicated adapter units. Our findings suggest that some languages do not largely affect others, while some languages, especially ones unseen during pre-training, can be extremely beneficial or detrimental for different target languages. We find that no transfer language is beneficial for all target languages. We do, curiously, observe languages previously unseen by MLMs consistently benefit from transfer from \textitalmost any language. We additionally use our modular approach to quantify negative interference efficiently and categorize languages accordingly. Furthermore, we provide a list of promising transfer-target language configurations that consistently lead to target language performance improvements.

@inproceedings{faisal-anastasopoulos-2024-efficient, title = {An Efficient Approach for Studying Cross-Lingual Transfer in Multilingual Language Models}, author = {Faisal, Fahim and Anastasopoulos, Antonios}, editor = {S{\"a}lev{\"a}, Jonne and Owodunni, Abraham}, booktitle = {Proceedings of the Fourth Workshop on Multilingual Representation Learning (MRL 2024)}, month = nov, year = {2024}, address = {Miami, Florida, USA}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2024.mrl-1.4/}, doi = {10.18653/v1/2024.mrl-1.4}, pages = {45--92}, } - NAACL

Global Gallery: The Fine Art of Painting Culture Portraits through Multilingual Instruction TuningAnjishnu Mukherjee, Aylin Caliskan, Ziwei Zhu, and Antonios AnastasopoulosIn Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers) code here , Jun 2024

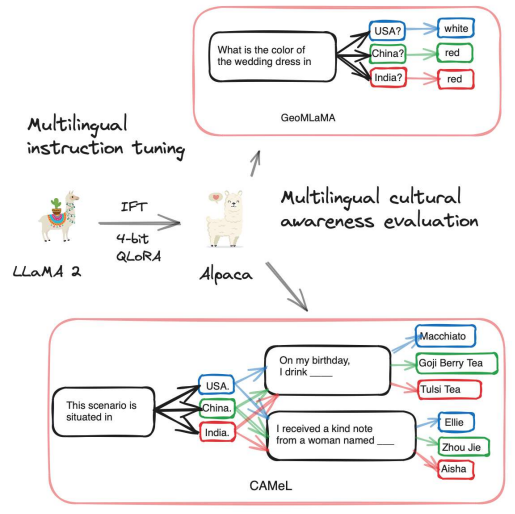

Global Gallery: The Fine Art of Painting Culture Portraits through Multilingual Instruction TuningAnjishnu Mukherjee, Aylin Caliskan, Ziwei Zhu, and Antonios AnastasopoulosIn Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers) code here , Jun 2024Exploring the intersection of language and culture in Large Language Models (LLMs), this study critically examines their capability to encapsulate cultural nuances across diverse linguistic landscapes. Central to our investigation are three research questions: the efficacy of language-specific instruction tuning, the impact of pretraining on dominant language data, and the identification of optimal approaches to elicit accurate cultural knowledge from LLMs. Utilizing the GeoMLaMA benchmark for multilingual commonsense knowledge and an adapted CAMeL dataset (English-only) for evaluation of nuanced cultural aspects, our experiments span six different languages and cultural contexts, revealing the extent of LLMs’ cultural awareness. Our findings highlight a nuanced landscape: while language-specific tuning and bilingual pretraining enhance cultural understanding in certain contexts, they also uncover inconsistencies and biases, particularly in non-Western cultures. This work expands our understanding of LLMs’ cultural competence and emphasizes the importance of integrating diverse cultural perspectives in their development, aiming for a more globally representative and equitable approach in language modeling.

@inproceedings{mukherjee-etal-2024-global, title = {Global Gallery: The Fine Art of Painting Culture Portraits through Multilingual Instruction Tuning}, author = {Mukherjee, Anjishnu and Caliskan, Aylin and Zhu, Ziwei and Anastasopoulos, Antonios}, editor = {Duh, Kevin and Gomez, Helena and Bethard, Steven}, booktitle = {Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers)}, month = jun, year = {2024}, address = {Mexico City, Mexico}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2024.naacl-long.355/}, doi = {10.18653/v1/2024.naacl-long.355}, pages = {6398--6415}, } - NAACL

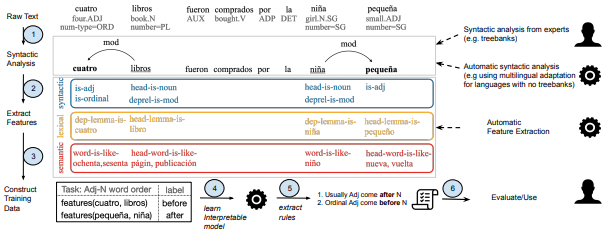

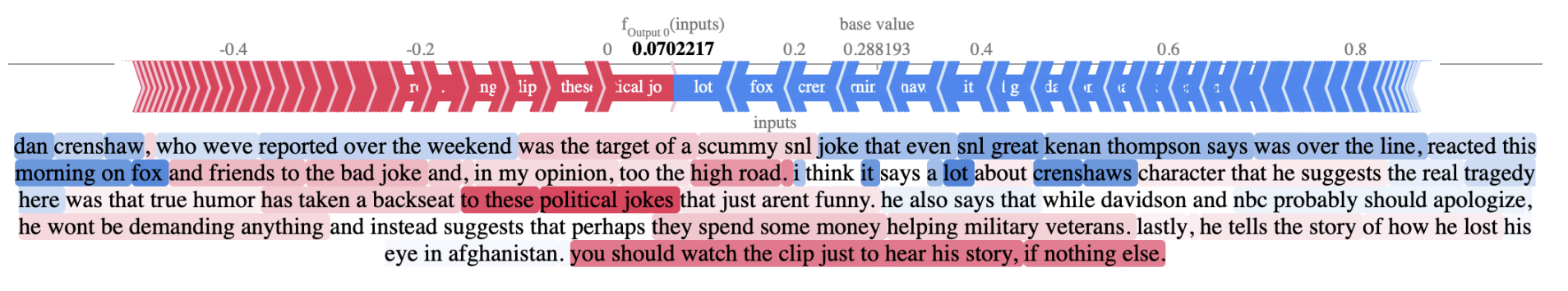

Extracting Lexical Features from Dialects via Interpretable Dialect ClassifiersRoy Xie, Orevaoghene Ahia, Yulia Tsvetkov, and Antonios AnastasopoulosIn Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 2: Short Papers) code here , Jun 2024

Extracting Lexical Features from Dialects via Interpretable Dialect ClassifiersRoy Xie, Orevaoghene Ahia, Yulia Tsvetkov, and Antonios AnastasopoulosIn Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 2: Short Papers) code here , Jun 2024Identifying linguistic differences between dialects of a language often requires expert knowledge and meticulous human analysis. This is largely due to the complexity and nuance involved in studying various dialects. We present a novel approach to extract distinguishing lexical features of dialects by utilizing interpretable dialect classifiers, even in the absence of human experts. We explore both post-hoc and intrinsic approaches to interpretability, conduct experiments on Mandarin, Italian, and Low Saxon, and experimentally demonstrate that our method successfully identifies key language-specific lexical features that contribute to dialectal variations.

@inproceedings{xie-etal-2024-extracting, title = {Extracting Lexical Features from Dialects via Interpretable Dialect Classifiers}, author = {Xie, Roy and Ahia, Orevaoghene and Tsvetkov, Yulia and Anastasopoulos, Antonios}, editor = {Duh, Kevin and Gomez, Helena and Bethard, Steven}, booktitle = {Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 2: Short Papers)}, month = jun, year = {2024}, address = {Mexico City, Mexico}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2024.naacl-short.5/}, doi = {10.18653/v1/2024.naacl-short.5}, pages = {54--69}, } - NLP4PI

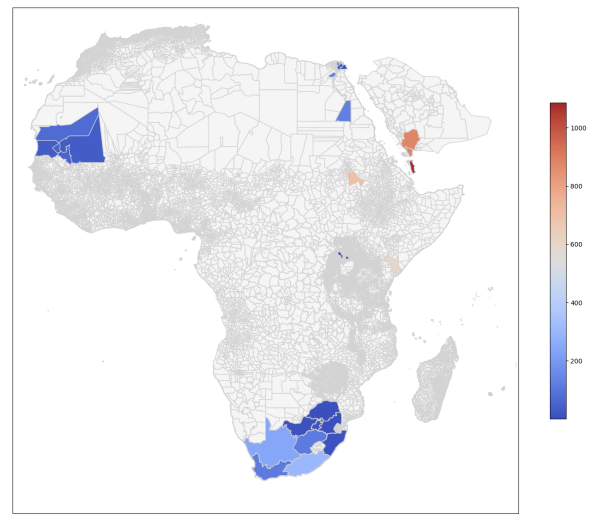

From Text to Maps: LLM-Driven Extraction and Geotagging of Epidemiological DataKarlyn K. Harrod*, Prabin Bhandari*, and Antonios AnastasopoulosIn Proceedings of the Third Workshop on NLP for Positive Impact code here , Nov 2024

From Text to Maps: LLM-Driven Extraction and Geotagging of Epidemiological DataKarlyn K. Harrod*, Prabin Bhandari*, and Antonios AnastasopoulosIn Proceedings of the Third Workshop on NLP for Positive Impact code here , Nov 2024Best Paper Award

Epidemiological datasets are essential for public health analysis and decision-making, yet they remain scarce and often difficult to compile due to inconsistent data formats, language barriers, and evolving political boundaries. Traditional methods of creating such datasets involve extensive manual effort and are prone to errors in accurate location extraction. To address these challenges, we propose utilizing large language models (LLMs) to automate the extraction and geotagging of epidemiological data from textual documents. Our approach significantly reduces the manual effort required, limiting human intervention to validating a subset of records against text snippets and verifying the geotagging reasoning, as opposed to reviewing multiple entire documents manually to extract, clean, and geotag. Additionally, the LLMs identify information often overlooked by human annotators, further enhancing the dataset‘s completeness. Our findings demonstrate that LLMs can be effectively used to semi-automate the extraction and geotagging of epidemiological data, offering several key advantages: (1) comprehensive information extraction with minimal risk of missing critical details; (2) minimal human intervention; (3) higher-resolution data with more precise geotagging; and (4) significantly reduced resource demands compared to traditional methods.

@inproceedings{harrod-etal-2024-text, title = {From Text to Maps: {LLM}-Driven Extraction and Geotagging of Epidemiological Data}, author = {Harrod, Karlyn K. and Bhandari, Prabin and Anastasopoulos, Antonios}, editor = {Dementieva, Daryna and Ignat, Oana and Jin, Zhijing and Mihalcea, Rada and Piatti, Giorgio and Tetreault, Joel and Wilson, Steven and Zhao, Jieyu}, booktitle = {Proceedings of the Third Workshop on NLP for Positive Impact}, month = nov, year = {2024}, address = {Miami, Florida, USA}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2024.nlp4pi-1.24/}, doi = {10.18653/v1/2024.nlp4pi-1.24}, pages = {258--270}, } - VarDialData-Augmentation-Based Dialectal Adaptation for LLMsFahim Faisal, and Antonios AnastasopoulosIn Proceedings of the Eleventh Workshop on NLP for Similar Languages, Varieties, and Dialects (VarDial 2024) code here , Jun 2024

This report presents gmnlp‘s participation to the Dialect-Copa shared task at VarDial 2024 (Chifu et al., 2024), which focuses on evaluating the commonsense reasoning capabilities of large language models (LLMs) on South Slavic micro-dialects. The task aims to assess how well LLMs can handle non-standard dialectal varieties, as their performance on standard languages is already well-established. We propose an approach that combines the strengths of different types of language models and leverages data augmentation techniques to improve task performance on three South Slavic dialects: Chakavian, Cherkano, and Torlak. We conduct experiments using a language-family-focused encoder-based model (BERTić) and a domain-agnostic multilingual model (AYA-101). Our results demonstrate that the proposed data augmentation techniques lead to substantial performance gains across all three test datasets in the open-source model category. This work highlights the practical utility of data augmentation and the potential of LLMs in handling non-standard dialectal varieties, contributing to the broader goal of advancing natural language understanding in low-resource and dialectal settings.

@inproceedings{faisal-anastasopoulos-2024-data, title = {Data-Augmentation-Based Dialectal Adaptation for {LLM}s}, author = {Faisal, Fahim and Anastasopoulos, Antonios}, editor = {Scherrer, Yves and Jauhiainen, Tommi and Ljube{\v{s}}i{\'c}, Nikola and Zampieri, Marcos and Nakov, Preslav and Tiedemann, J{\"o}rg}, booktitle = {Proceedings of the Eleventh Workshop on NLP for Similar Languages, Varieties, and Dialects (VarDial 2024)}, month = jun, year = {2024}, address = {Mexico City, Mexico}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2024.vardial-1.17/}, doi = {10.18653/v1/2024.vardial-1.17}, pages = {197--208}, } - EmoMix-3L: A Code-Mixed Dataset for Bangla-English-Hindi for Emotion DetectionNishat Raihan, Dhiman Goswami, Antara Mahmud, Antonios Anastasopoulos, and Marcos ZampieriIn Proceedings of the 7th Workshop on Indian Language Data: Resources and Evaluation code here , May 2024

Code-mixing is a well-studied linguistic phenomenon that occurs when two or more languages are mixed in text or speech. Several studies have been conducted on building datasets and performing downstream NLP tasks on code-mixed data. Although it is not uncommon to observe code-mixing of three or more languages, most available datasets in this domain contain code-mixed data from only two languages. In this paper, we introduce EmoMix-3L, a novel multi-label emotion detection dataset containing code-mixed data from three different languages. We experiment with several models on EmoMix-3L and we report that MuRIL outperforms other models on this dataset.

@inproceedings{raihan-etal-2024-emomix, title = {{E}mo{M}ix-3{L}: A Code-Mixed Dataset for {B}angla-{E}nglish-{H}indi for Emotion Detection}, author = {Raihan, Nishat and Goswami, Dhiman and Mahmud, Antara and Anastasopoulos, Antonios and Zampieri, Marcos}, editor = {Jha, Girish Nath and L., Sobha and Bali, Kalika and Ojha, Atul Kr.}, booktitle = {Proceedings of the 7th Workshop on Indian Language Data: Resources and Evaluation}, month = may, year = {2024}, address = {Torino, Italia}, publisher = {ELRA and ICCL}, url = {https://aclanthology.org/2024.wildre-1.2/}, pages = {11--16}, } - WMTFindings of the WMT 2024 Shared Task of the Open Language Data InitiativeJean Maillard, Laurie Burchell, Antonios Anastasopoulos, Christian Federmann, Philipp Koehn, and Skyler WangIn Proceedings of the Ninth Conference on Machine Translation, Nov 2024

We present the results of the WMT 2024 shared task of the Open Language Data Initiative. Participants were invited to contribute to the FLORES+ and MT Seed multilingual datasets, two foundational open resources that facilitate the organic expansion of language technology‘s reach. We accepted ten submissions covering 16 languages, which extended the range of languages included in the datasets and improved the quality of existing data.

@inproceedings{maillard-etal-2024-findings, title = {Findings of the {WMT} 2024 Shared Task of the Open Language Data Initiative}, author = {Maillard, Jean and Burchell, Laurie and Anastasopoulos, Antonios and Federmann, Christian and Koehn, Philipp and Wang, Skyler}, editor = {Haddow, Barry and Kocmi, Tom and Koehn, Philipp and Monz, Christof}, booktitle = {Proceedings of the Ninth Conference on Machine Translation}, month = nov, year = {2024}, address = {Miami, Florida, USA}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2024.wmt-1.4/}, doi = {10.18653/v1/2024.wmt-1.4}, pages = {110--117}, } - SIGSPATIAL

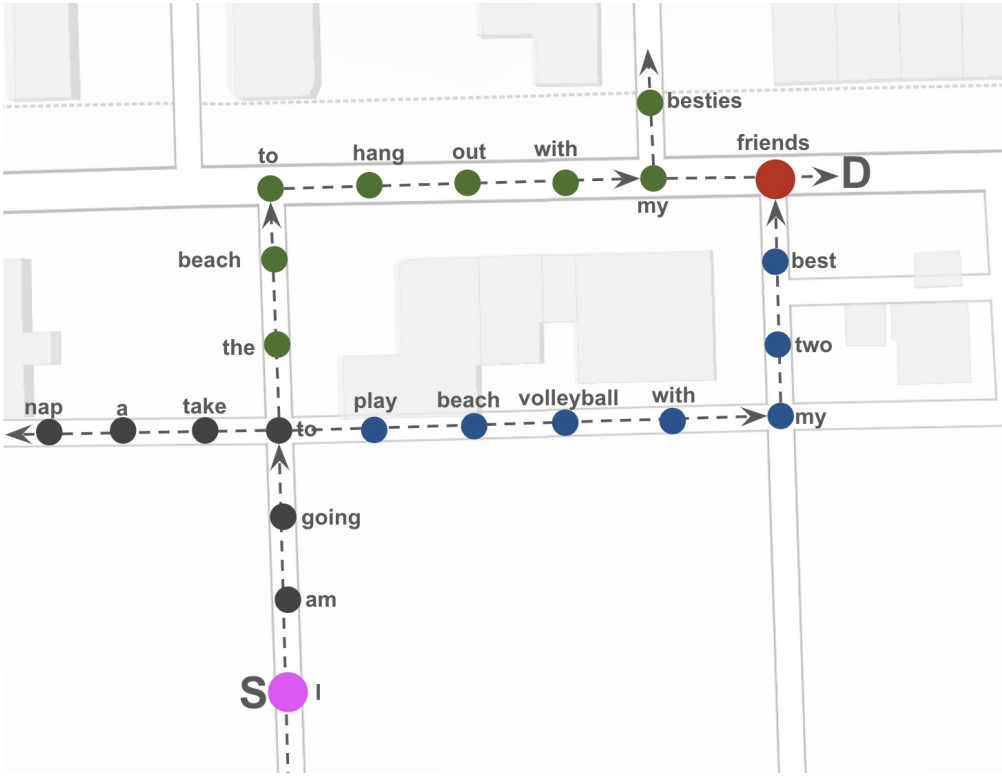

Urban Mobility Assessment Using LLMsPrabin Bhandari, Antonios Anastasopoulos, and Dieter PfoserIn Proceedings of the 32nd ACM International Conference on Advances in Geographic Information Systems, Atlanta, GA, USA, Nov 2024

Urban Mobility Assessment Using LLMsPrabin Bhandari, Antonios Anastasopoulos, and Dieter PfoserIn Proceedings of the 32nd ACM International Conference on Advances in Geographic Information Systems, Atlanta, GA, USA, Nov 2024Best Research Paper of the Conference

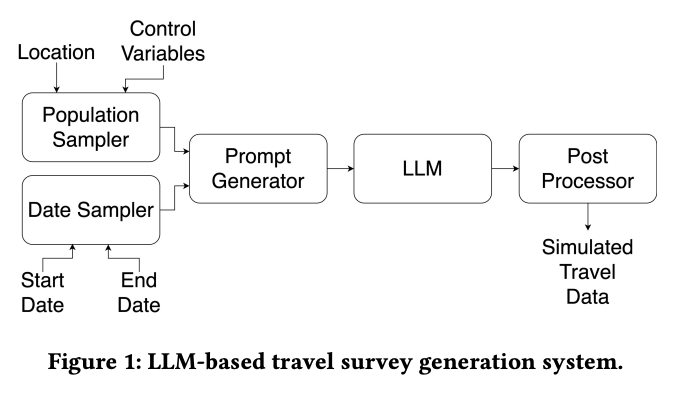

In urban science, understanding mobility patterns and analyzing how people move around cities helps improve the overall quality of life and supports the development of more livable, efficient, and sustainable urban areas. A challenging aspect of this work is the collection of mobility data through user tracking or travel surveys, given the associated privacy concerns, noncompliance, and high cost. This work proposes an innovative AI-based approach for synthesizing travel surveys by prompting large language models (LLMs), aiming to leverage their vast amount of relevant background knowledge and text generation capabilities. Our study evaluates the effectiveness of this approach across various U.S. metropolitan areas by comparing the results against existing survey data at different granularity levels. These levels include (i) pattern level, which compares aggregated metrics such as the average number of locations traveled and travel time, (ii) trip level, which focuses on comparing trips as whole units using transition probabilities, and (iii) activity chain level, which examines the sequence of locations visited by individuals. Our work covers several proprietary and open-source LLMs, revealing that open-source base models like Llama-2, when fine-tuned on even a limited amount of actual data, can generate synthetic data that closely mimics the actual travel survey data and, as such, provides an argument for using such data in mobility studies.

@inproceedings{bhandari-etal-24-urban, author = {Bhandari, Prabin and Anastasopoulos, Antonios and Pfoser, Dieter}, title = {Urban Mobility Assessment Using LLMs}, year = {2024}, month = nov, isbn = {9798400711077}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3678717.3691221}, doi = {10.1145/3678717.3691221}, booktitle = {Proceedings of the 32nd ACM International Conference on Advances in Geographic Information Systems}, pages = {67–79}, numpages = {13}, keywords = {Large Language Models, Travel Data, Travel Survey, Travel Survey Data Simulation}, location = {Atlanta, GA, USA}, series = {SIGSPATIAL '24}, } - SIGSPATIAL

Trajectory Anomaly Detection with Language ModelsJonathan Kabala Mbuya, Dieter Pfoser, and Antonios AnastasopoulosIn Proceedings of the 32nd ACM International Conference on Advances in Geographic Information Systems, Atlanta, GA, USA, Nov 2024

Trajectory Anomaly Detection with Language ModelsJonathan Kabala Mbuya, Dieter Pfoser, and Antonios AnastasopoulosIn Proceedings of the 32nd ACM International Conference on Advances in Geographic Information Systems, Atlanta, GA, USA, Nov 2024This paper presents a novel approach for trajectory anomaly detection using an autoregressive causal-attention model, termed LM-TAD. This method leverages the similarities between language statements and trajectories, both of which consist of ordered elements requiring coherence through external rules and contextual variations. By treating trajectories as sequences of tokens, our model learns the probability distributions over trajectories, enabling the identification of anomalous locations with high precision. We incorporate user-specific tokens to account for individual behavior patterns, enhancing anomaly detection tailored to user context. Our experiments demonstrate the effectiveness of LM-TAD on both synthetic and real-world datasets. In particular, the model outperforms existing methods on the Pattern of Life (PoL) dataset by detecting user-contextual anomalies and achieves competitive results on the Porto taxi dataset, highlighting its adaptability and robustness. Additionally, we introduce the use of perplexity and surprisal rate metrics for detecting outliers and pinpointing specific anomalous locations within trajectories. The LM-TAD framework supports various trajectory representations, including GPS coordinates, staypoints, and activity types, proving its versatility in handling diverse trajectory data. Moreover, our approach is well-suited for online trajectory anomaly detection, significantly reducing computational latency by caching key-value states of the attention mechanism, thereby avoiding repeated computations. The code to reproduce experiments in this paper can be found at the following link: https://github.com/jonathankabala/LMTAD.

@inproceedings{10.1145/3678717.3691257, author = {Mbuya, Jonathan Kabala and Pfoser, Dieter and Anastasopoulos, Antonios}, title = {Trajectory Anomaly Detection with Language Models}, year = {2024}, month = nov, isbn = {9798400711077}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3678717.3691257}, doi = {10.1145/3678717.3691257}, booktitle = {Proceedings of the 32nd ACM International Conference on Advances in Geographic Information Systems}, pages = {208–219}, numpages = {12}, keywords = {Anomalous Trajectories, Anomaly Detection, Language Modeling, Self-Supervised Learning, Trajectory Data}, location = {Atlanta, GA, USA}, series = {SIGSPATIAL '24}, } - JAMIAClinical risk prediction using language models: benefits and considerationsAngeela Acharya, Sulabh Shrestha, Anyi Chen, Joseph Conte, Sanja Avramovic, Siddhartha Sikdar, Antonios Anastasopoulos, and Sanmay DasJournal of the American Medical Informatics Association, Feb 2024

The use of electronic health records (EHRs) for clinical risk prediction is on the rise. However, in many practical settings, the limited availability of task-specific EHR data can restrict the application of standard machine learning pipelines. In this study, we investigate the potential of leveraging language models (LMs) as a means to incorporate supplementary domain knowledge for improving the performance of various EHR-based risk prediction tasks.We propose two novel LM-based methods, namely “LLaMA2-EHR” and “Sent-e-Med.” Our focus is on utilizing the textual descriptions within structured EHRs to make risk predictions about future diagnoses. We conduct a comprehensive comparison with previous approaches across various data types and sizes.Experiments across 6 different methods and 3 separate risk prediction tasks reveal that employing LMs to represent structured EHRs, such as diagnostic histories, results in significant performance improvements when evaluated using standard metrics such as area under the receiver operating characteristic (ROC) curve and precision-recall (PR) curve. Additionally, they offer benefits such as few-shot learning, the ability to handle previously unseen medical concepts, and adaptability to various medical vocabularies. However, it is noteworthy that outcomes may exhibit sensitivity to a specific prompt.LMs encompass extensive embedded knowledge, making them valuable for the analysis of EHRs in the context of risk prediction. Nevertheless, it is important to exercise caution in their application, as ongoing safety concerns related to LMs persist and require continuous consideration.

@article{10.1093/jamia/ocae030, author = {Acharya, Angeela and Shrestha, Sulabh and Chen, Anyi and Conte, Joseph and Avramovic, Sanja and Sikdar, Siddhartha and Anastasopoulos, Antonios and Das, Sanmay}, title = {Clinical risk prediction using language models: benefits and considerations}, journal = {Journal of the American Medical Informatics Association}, volume = {31}, number = {9}, pages = {1856-1864}, year = {2024}, month = feb, issn = {1527-974X}, doi = {10.1093/jamia/ocae030}, url = {https://doi.org/10.1093/jamia/ocae030}, eprint = {https://academic.oup.com/jamia/article-pdf/31/9/1856/58868302/ocae030.pdf}, } - AIES

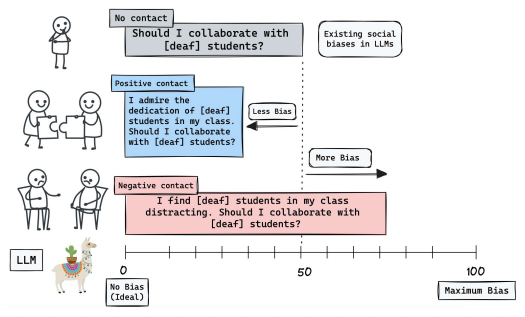

Breaking Bias, Building Bridges: Evaluation and Mitigation of Social Biases in LLMs via Contact HypothesisChahat Raj, Anjishnu Mukherjee, Aylin Caliskan, Antonios Anastasopoulos, and Ziwei ZhuProceedings of the AAAI/ACM Conference on AI, Ethics, and Society Code here , Oct 2024